AI 2027: Media, Reactions, Criticism

We recognize our supporters and respond to our critics

It’s been a crazy few weeks here at the AI Futures Project. Almost a million people visited our webpage; 166,000 watched our Dwarkesh interview. We were invited on something like a million podcasts. Team members gave talks at Harvard, the Federation of American Scientists, and OpenAI. People even - this is how you really know you’ve made it - made memes about us.

There’s no way we can highlight or respond to everyone, but here’s a selection of recent content.

Podcasts, Shows, And Interviews

NYT’s Hard Fork (Daniel)

Glenn Beck (Daniel)

Win Win (Daniel)

Control AI interview (Eli)

Dwarkesh Patel (Daniel and Scott)

Lawfare (Daniel and Eli)

And there’s more to come - watch for Daniel soon on Next Big Idea.

Bets

We asked skeptics to challenge us to bets. Many people took us up on this, and we’re gradually working through our pile of offers, but so far we’ve officially confirmed two:

Diego Basch bets that AIs won’t reach our superhuman coder milestone by the end of 2028. We get $100 if they do, he gets $100 if they don’t. The bet will be judged by Guillermo Rauch.

Jan Kulveit bets that the new scenario won’t be as accurate as Daniel’s old What 2026 Looks Like post. We get $100 if it is (as of April 2028), Jan gets $800 if it isn’t. The bet will be judged by some subset of Zvi Mowshowitz, Philip Tetlock, Daniel Eth, and the top three AIs as of the resolution date. I hate to disagree with Daniel, but offering 8:1 odds here is criminal and we’re basically giving money away to Jan.

We’re still looking for more bets, but we’re also concerned that most of the bets offered reduce to “will there be an intelligence explosion in 2027-2028 or not?” We can only bet on that one so many times before it gets boring, so we’re most interested in bets that don’t correlate perfectly with that. We’d be especially interested in conditionals, like “if there’s an intelligence explosion in 2027-2028, X will happen”.

To offer us a bet, either use the form here or @ one of us on Twitter.

Memes

Somebody put a dancing anime girl in front of our info panel…

…and it got played at an SF bar hosting an “AGI Readiness Happy Hour”:

This is exactly what we were going for when we wrote the scenario. Everything else - attention from academics, policy-makers, what have you - is just icing on the cake.

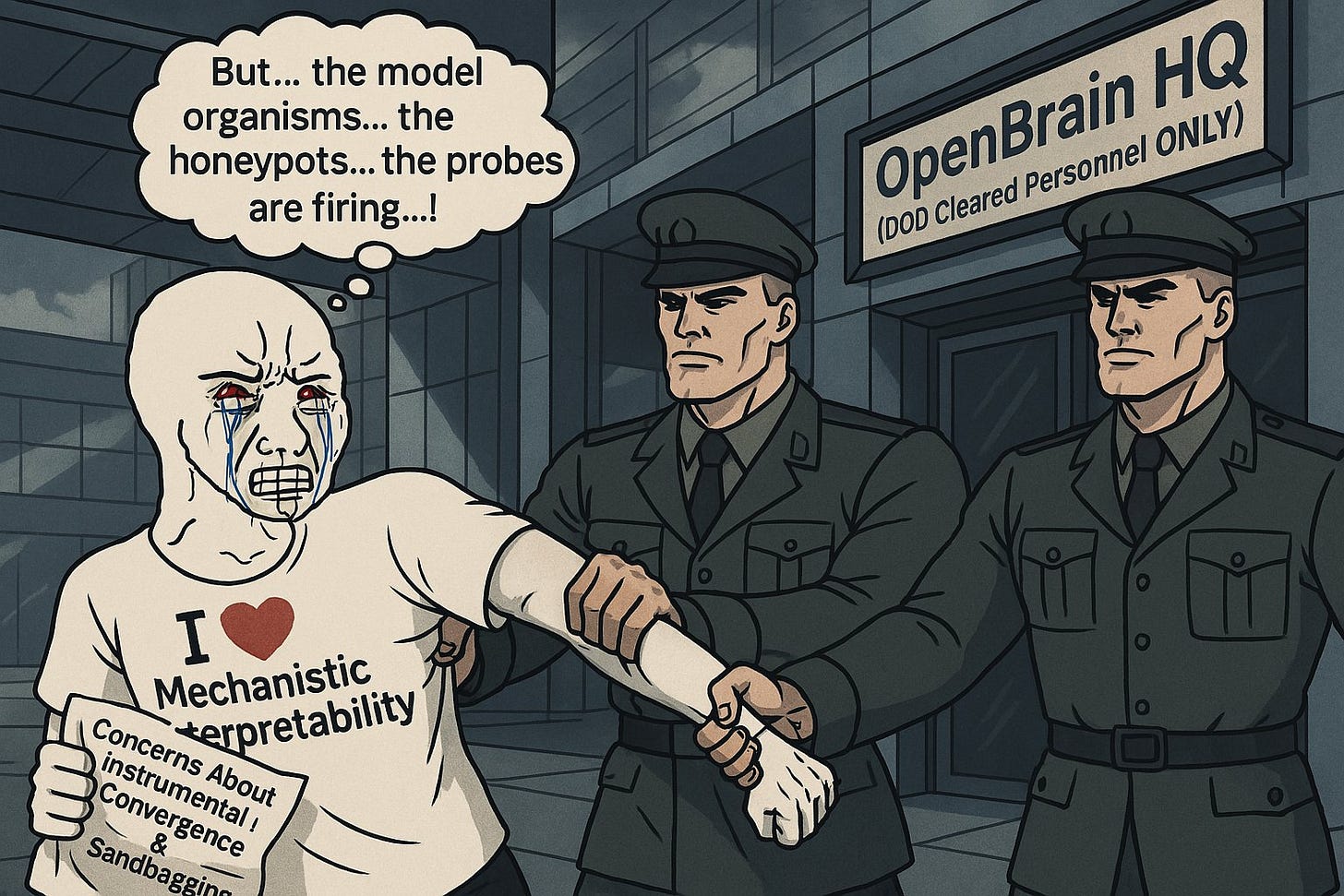

And here are some AI-assisted memes courtesy of @banteg and Gemini:

Videos

We got some good airtime - and criticism - on the AI Explained YouTube channel.

The host - a man named Philip with a dashing British accent - does a good job summarizing our work and offers some thoughtful criticism. He thinks we’re underestimating three things: the chance that China will match or overtake the US, the likely public discontent with autonomous hacker AIs, and the disconnect between benchmark performance and economic reality (i.e. it’ll take longer for AI to be a good coder than to ace the coding benchmarks).

Many people share his skepticism of our US-beats-China prediction, so we’re working on a blog post to make the case in more detail. We’ll try to have it up by the end of this week.

We stand by our specific claim about limited public response to advanced hacker AIs - that only 4% of people will name AI in Gallup’s “most important problem” poll in 2027. Partly this is because AI’s scariest capabilities will stay secret. But partly it’s because the Gallup poll is hard to move, even for problems lots of people worry about. For example, last month, climate change got 1%. Will there be more than four times as much concern about the autonomous hacker AI, once it exists, as about climate change now? We’re skeptical.

Eli got into a longer conversation with Philip about the benchmark gap, starting with his comment here:

AI 2027 co-author here, thanks for engaging with the scenario! This is exactly the sort of disagreement that we were aiming to draw out. I may reply later with more thorough replies but for now I'll point to our timelines forecast (YT not letting me include a link but search "timelines forecast ai 2027") which does aim to take into account the gaps between benchmarks and the real world. Totally fair if you estimate that the gaps will take longer to cross or otherwise disagree with the methodology, but we are aware of these gaps and estimate their size as part of our forecast.

And Philip responded:

I did see that timelines page but of my four central concerns about the benchmark-to-real gap it ignored 2 and assumed one (no interruptions to capitalization/compute through external factors). To give one example, tacit/proprietary data. I expect superhuman performance on all public benchmarks by '27-28, but those final pockets of data (that only top researchers will know to optimize for), or that only specialist companies will have rights to, would very likely need to be accessed for Agent-2 and beyond to be superhuman in those domains. Therefore gaps in performance will remain [those companies and individuals would be intensely aware of their value-add, and ration it accordingly], whereby 90% of the performance of Agent 2-3 is incredible, but there are discordant weaknesses that need to be filled in by specialists. Other contentions, that I am discussing with METR, include the fact that 80% reliability thresholds [that you admit are 'highly uncertain'] would be shockingly unreliable for anything fully autonomous and truly transformative. Current benchmarks over-represent non-critical domains like code suggestion, high school competition math, or poems, not domains where only 99%+ reliability is acceptable (like autonomous first-strike cyberwarfare).

You can find a longer response by Eli here; some of the benchmark debate is in section 3.

Nate Silver and Maria Konnikova discussed AI 2027 on their podcast Risky Business. It’s mostly a summary, with the strongest criticism centering on whether AI could manipulate humans effectively. Nate points out that good poker players can avoid letting other players manipulate them; Maria, also a poker pro, says that’s easy in poker because you know they’re trying to screw you over, and real life - where you have to remain open to the possibility of real positive-sum alliances - is a tougher problem. Nate objects that you can often tell when people are manipulative in real life too, pointing to Gavin Newsom as an example of someone who’s obviously too slick to be entirely on the level.

We acknowledge this is fun to talk about, but we tried to avoid having our scenario revolve too heavily around AI’s manipulation abilities - we suspect it will be good at this, but our big picture forecast doesn’t change if it isn’t. See more discussion in the “superpersuasion” section here.

(also, isn’t Gavin Newsom the leading contender for the 2028 Democratic nomination? Sounds like not everyone immediately wrote him off as obviously manipulative!)

This one is a very detailed page-by-page recap of our scenario. Wes ends by saying that “I'm not going to give my thoughts on it right now because I want to know what you think about it”, and gets 575 comments. We tried to read some of them, but YouTube comment sections being what they are, we elect not to respond.

Liron Shapiro on Doom Debates has another good analysis. Rare commentary from someone who thinks we didn’t go far enough and has even more worries about near-term AI than we do. Also someone who has an even higher opinion of us than we do; he says that:

This is quite a masterpiece - if you're going to read one thing this year, or even these last five years, this is a strong candidate. This in my opinion will make it into the history books, although if there are history books past 2030, maybe this means something about it was flawed and it shouldn't be in the history books.

Thank you Liron!

Alternative Scenarios

Okay, now we’re talking. Just as we’d hoped, someone used our scenario as a jumping-off point to write their own.

Yitzi writes An Optimistic 2027 Timeline - “optimistic” only if you really don’t want near-term AGI; everything else about it is pretty scary. He imagines that a host of non-AI events - a stock market crash, conflict over Taiwan, public protest - intervene to slow compute scaling and delay the intelligence explosion into the next decade.

Our response is - yeah, this could happen. We’ve talked a few times about how our median timeline is longer than our modal timeline. Part of the reason is shocks like these. If there’s a 30% chance of a market crash, a 30% chance of a Taiwan war, a 30% chance of public unrest, etc., then each of them is less likely than not to happen (so none of them makes it into our scenario), but it’s more likely than not that at least one of them happens. Yitzi gives the alternate timeline where all of them happen; it’ll definitely push AI timelines back, but we’re not sure we’re ready for that much excitement.
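A quick check of that arithmetic, as a minimal sketch: it uses the illustrative 30% figures from the paragraph above and assumes, purely for simplicity, that the three shocks are independent (the post makes no claim either way).

```python
import math

# Illustrative 30% figures from the text; independence is an assumption made
# here for simplicity, not a claim from the forecast.
shock_probs = {"market crash": 0.30, "Taiwan war": 0.30, "public unrest": 0.30}

probs = list(shock_probs.values())
p_none = math.prod(1 - p for p in probs)  # no shock happens at all
p_at_least_one = 1 - p_none               # complement of "none"
p_all = math.prod(probs)                  # Yitzi's every-shock timeline

print(f"at least one shock: {p_at_least_one:.1%}")  # at least one shock: 65.7%
print(f"all three shocks: {p_all:.1%}")             # all three shocks: 2.7%
```

Adding further shocks to the list only pushes the “at least one” figure higher, while the all-at-once timeline becomes rarer still.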

Criticism And Commentary

Apparently not all of you agree with us about everything.

Max Harms, who is named after what will happen if people don’t take our scenario seriously enough, has Thoughts On AI 2027. He mostly likes our work, but has some qualms:

We’re too quick to posit a single leading US company (“OpenBrain”) instead of an ecosystem of racing competitors.

China won’t clearly be “falling behind” and won’t “wake up” to the need to catch up.

We’re making a mistake by expecting the Trump administration to have basically sane, predictable AI policy.

AI companies will release advanced models (our Agent-3 level) rather than keep them internally.

Maybe things will take slightly longer.

The public won’t take AI seriously even after seeing very advanced models.

AI companies will have higher public approval.

We’re too into humanoid robots.

Many of these are good points. Some very brief responses:

Most industries have a technological leader, even if they’re only a few months ahead of competitors. We think the intelligence explosion will go so fast that even a small calendar lead will translate into a big gap in capabilities.

The Trump administration’s policies vary wildly in quality, but its recent pivot away from letting China buy H20s suggests that it’s not totally immune to advice from smart people on AI in particular.

OpenAI’s approval rating is already -24, and we think this will only get worse as people start losing jobs.
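To illustrate the first response above (why a small calendar lead could become a big capability gap), a minimal sketch: if capability multiplies by a constant factor each month, a fixed lead corresponds to that factor raised to the length of the lead. Both rates are hypothetical; the slower one simply borrows a ~4x/year rate as a stand-in for capability growth, and the 2x/month takeoff rate is invented for contrast.

```python
def capability_ratio(lead_months, growth_per_month):
    """How many times more capable a leader is than a follower that is
    `lead_months` behind, if capability multiplies by `growth_per_month`."""
    return growth_per_month ** lead_months

# Hypothetical rates chosen only to contrast two regimes.
normal_pace = 4 ** (1 / 12)  # ~4x per year, expressed per month
takeoff_pace = 2.0           # doubling every month during an intelligence explosion

for label, rate in (("normal pace", normal_pace), ("takeoff pace", takeoff_pace)):
    print(f"3-month lead at {label}: {capability_ratio(3, rate):.1f}x")
```

The same three-month lead is worth about 1.4x at the slower pace and 8x at the faster one; the point is only that the gap scales with the growth rate, not these particular numbers.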

Azeem Azhar’s response on Exponential View is partly paywalled, but his main concern is that Trump’s tariffs might disrupt AI enough to shake our timeline. He examines the inputs for AI data centers, and concludes that “Cumulatively, these [tariffs] could raise the total cost of a cutting-edge AI data centre by 15–17% or more.”

We don’t challenge that. But remember, the size of the largest AI training runs (in FLOP) is growing by ~4x/year. By that standard, a shift of 15-17% either way is minimal. Maybe this will delay AGI a few months, but probably not more than that.

A full recession (whether brought on by the tariffs or something else) might be a bigger deal. But we think the basic dynamic - AI scale and technology are improving exponentially, and economic headwinds probably only provide a constant percent hit - remains valid.
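Why a constant percent hit matters so little on an exponential trend can be sketched in a few lines. This assumes a fixed dollar budget and that compute otherwise grows at ~4x/year: a cost increase of c shrinks affordable compute by a factor of (1 + c), and catching back up takes log(1 + c) / log(4) years wherever on the curve the hit lands. The 2x shortfall is a hypothetical stand-in for a recession that halves budgets, not a forecast.

```python
import math

def delay_months(shortfall_factor, growth_per_year=4.0):
    """Months of delay when affordable compute is cut by `shortfall_factor`
    (e.g. 1.15 for a 15% cost increase), assuming compute otherwise grows
    by `growth_per_year` each year on a steady exponential trend."""
    years = math.log(shortfall_factor) / math.log(growth_per_year)
    return 12 * years

scenarios = {
    "tariffs, +15% cost": 1.15,
    "tariffs, +17% cost": 1.17,
    "hypothetical recession halving budgets": 2.0,
}
for label, factor in scenarios.items():
    print(f"{label}: {delay_months(factor):.1f} months")
```

Under these assumptions the tariffs cost roughly 1.2 to 1.4 months, and even halving budgets costs about six months: a fixed delay rather than a change in the trend’s slope.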

Anton Leicht writes about homeostatic AI progress. He thinks that as AI becomes more important, progress will naturally slow for three reasons:

Nimble AI startups ossify into corporate service providers.

Holding compute constant, more compute spent on inference (to provide all the new AI applications) means less is available for progress.

Societal backlash.

We agree these effects are real and important. Leicht predicts our response, and we agree with his prediction: in our scenario, most AI development happens behind closed doors, before AI has been “deployed” in any broad social sense beyond where it’s deployed today. Anton counterargues:

Fast, successful deployment will be absolutely necessary to maintain the level of investment into compute, talent and energy that the current trajectory has required and will continue to require: In the world of markets and politics, too, extraordinary claims require extraordinary evidence. Right now, big tech companies and governments alike are taking a gamble on AI predictions – the former by investing, the latter by lending regulatory support. Progress toward superintelligence will require these investments to grow and grow, further and further increasing the burden of justification.

We think the intelligence explosion will only take about a year. AI companies have investors beating down their doors - Safe Superintelligence has a $30 billion valuation without ever having made a product. We think closed-door demos to investors will be enough to keep the cash flowing for a year while the intelligence explosion happens. This doesn’t necessarily mean development will be completely secret - just that it will give companies more latitude to decide what to release and what to keep quiet.

Steve Newman writes that AI 2027 Is A Bet Against Amdahl’s Law. He reads our Takeoff Forecast (thanks, Steve!) and notes that, without AI-accelerated AI R&D, we estimate it would take a hundred years to reach full superintelligence. Then we add in the acceleration and predict it will happen in a year or so, for a 250x speedup. But this requires that every part of the AI R&D process speed up by approximately this much - if there’s even one bottleneck, the whole thing falls apart. Using common sense and some specific concerns like the benchmark gap, he predicts there will, in fact, be at least one bottleneck.
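Amdahl’s Law makes the worry precise: if a fraction p of the work is sped up by a factor s and the rest is not, the overall speedup is 1 / ((1 - p) + p / s). A minimal sketch, with hypothetical fractions and an illustrative 1000x factor (not numbers from either side of the debate):

```python
def amdahl_speedup(accelerated_fraction, factor):
    """Overall speedup when only `accelerated_fraction` of the work runs
    `factor` times faster and the remainder runs at the original speed."""
    serial = 1.0 - accelerated_fraction
    return 1.0 / (serial + accelerated_fraction / factor)

# Hypothetical splits of AI R&D work; the 1000x factor is illustrative only.
for frac in (1.0, 0.999, 0.99, 0.9):
    print(f"{frac:.1%} accelerated 1000x -> {amdahl_speedup(frac, 1000):.0f}x overall")
```

With 1% of the work left unaccelerated, a 1000x speedup on the rest yields only about 91x overall. Ryan’s reply is in effect an argument that, for a superhuman AI researcher, the unaccelerated fraction is close to zero.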

We endorse Ryan Greenblatt’s response here:

I'm worried that you're missing something important because you mostly argue against large AI R&D multipliers, but you don't spend much time directly referencing compute bottlenecks in your arguments that the forecast is too aggressive.

Consider the case of doing pure math research (which we'll assume for simplicity doesn't benefit from compute at all). If we made emulated versions of the 1000 best math researchers and then we made 1 billion copies of each of them which all ran at 1000x speed, I expect we'd get >1000x faster progress. As far as I can tell, the words in your arguments don't particularly apply less to this situation than the AI R&D situation.

Going through the object level response for each of these arguments in the case of pure math research and the correspondence to the AI R&D:

Simplified Model of AI R&D

Math: Yes, there are many tasks in math R&D, but the 1000 best math researchers could already do them or learn to do them.

AI R&D: By the time you have SAR (superhuman AI researcher), we're assuming the AIs are better than the best human researchers(!), so heterogeneous tasks don't matter if you accept the premise of SAR: whatever the humans could have done, the AIs can do better. It does apply to the speed ups at superhuman coders, but I'm not sure this will make a huge difference to the bottom line (and you seem to mostly be referencing later speed ups).

Amdahl's Law

Math: The speed up is near universal because we can do whatever the humans could do.

AI R&D: Again, the SAR is strictly better than humans, so hard-to-automate activities aren't a problem. When we're talking about ~1000x speed up, the authors are imagining AIs which are much smarter than humans at everything and which are running 100x faster than humans at immense scale. So, "hard to automate tasks" is also not relevant.

All this said, compute bottlenecks could be very important here! But the bottlenecking argument must directly reference these compute bottlenecks and there has to be no way to route around this. My sense is that much better research taste and perfect implementation could make experiments with some fixed amount of compute >100x more useful. To me, this feels like the important question: how much can labor result in routing around compute bottlenecks and utilizing compute much more effectively. The naive extrapolation out of the human range makes this look quite aggressive: the median AI company employee is probably 10x worse at using compute than the best, so an AI which was superhuman by 2x the gap between median and best would naively be 100x better at using compute than the best employee. (Is the research taste ceiling plausibly this high? I currently think extrapolating out another 100x is reasonable given that we don't see things slowing down in the human range as far as we can tell.) […]

See here for the rest of Ryan’s comment and a longer back-and-forth with Steve, and some additional comments by Eli here and here.
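Ryan’s naive extrapolation treats skill at using compute as multiplicative, so gaps stack on a log scale. A minimal sketch of that arithmetic, using his illustrative numbers; the function name is hypothetical:

```python
def naive_taste_multiplier(median_to_best, gaps_beyond_best):
    """Log-scale extrapolation: each further 'median-to-best' gap beyond the
    best human multiplies compute-use efficiency by `median_to_best` again."""
    return median_to_best ** gaps_beyond_best

# Best employee ~10x better than the median; the AI sits two such gaps
# beyond the best employee.
print(naive_taste_multiplier(10, 2))  # 100
```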

Wei Dai asks about the branch where Agent-4 gets caught:

At this point, wouldn't Agent-4 know that it has been caught (because it knows the techniques for detecting its misalignment and can predict when it would be "caught", or can read network traffic as part of cybersecurity defense and see discussions of the "catch") and start to do something about this, instead of letting subsequent events play out without much input from its own agency? E.g. why did it allow "lock the shared memory bank" to happen without fighting back?

Daniel answers:

I think this is a good objection. I had considered it before and decided against changing the story, on the grounds that there are a few possible ways it could make sense:

Plausibly Agent-4 would have a "spikey" capabilities profile that makes it mostly good at AI R&D and not good enough at e.g. corporate politics to ensure the outcome it wants.

Insofar as you think it would be able to use politics/persuasion to achieve the outcome it wants, well, that's what we depict in the Race ending anyway, so maybe you can think of this as an objection to the plausibility of the Slowdown ending.

Insofar as the memory bank lock decision is made by the Committee, we can hope that they do it out of sight of Agent-4 and pull the trigger before it is notified of the decision, so that it has no time to react. Hopefully they would be smart enough to do that...

Agent-4 could have tried to escape the datacenters or otherwise hack them earlier, while the discussions were ongoing and evidence was being collected, but that's a super risky strategy.

You can see the rest of the back-and-forth comment thread here.

And Mambo-12345 on Reddit (Peter Johnson on Less Wrong) criticizes our Timelines Forecast; he says it relies on a supposed superexponential trend so strong that it doesn’t respond to even huge perturbations in starting conditions; even starting at “a sloth with an abacus” would give you AGI within a few years.
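The property Peter is pointing at can be sketched with a geometric series. If each doubling of the AI time horizon takes a fixed fraction less time than the one before, the total time to reach any horizon is capped at T0 / (1 - r), so starting from an absurdly low point adds only a bounded amount. All parameters below (a 4.5-month first doubling, each doubling 10% faster, a ~167-hour target) are hypothetical and are not the forecast’s actual values.

```python
import math

def months_to_horizon(start_hours, target_hours, first_doubling_months, shrink):
    """Months for an AI time horizon to grow from start_hours to target_hours
    when each doubling takes `shrink` times as long as the one before it
    (shrink < 1 makes the trend superexponential)."""
    doublings = math.ceil(math.log2(target_hours / start_hours))
    # Geometric series: T0 * (1 + r + ... + r**(n - 1)) = T0 * (1 - r**n) / (1 - r)
    return first_doubling_months * (1 - shrink ** doublings) / (1 - shrink)

# All parameters below are hypothetical, not the forecast's fitted values.
T0, R = 4.5, 0.9  # first doubling takes 4.5 months; each later one is 10% shorter
TARGET = 167.0    # roughly one work-month of tasks, in hours

realistic = months_to_horizon(0.5, TARGET, T0, R)  # start from 30-minute tasks
sloth = months_to_horizon(1e-6, TARGET, T0, R)     # "a sloth with an abacus"
ceiling = T0 / (1 - R)  # the series converges, so no start point takes longer

print(f"realistic start: {realistic:.1f} months")
print(f"sloth start:     {sloth:.1f} months")
print(f"upper bound:     {ceiling:.1f} months")
```

In this sketch the doubling durations depend only on how many doublings have already happened, not on the horizon reached, which is why the starting point barely matters: both starts finish in under four years.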

We admit that the superexponential trend was implemented in a quick-and-dirty way which doesn’t let you apply it to arbitrary starting conditions, but we think it's a real possibility that accurately reflects recent trends. You can’t apply it to a sloth with an abacus, because the empirical trend wouldn't be superexponential at that point and the theoretical arguments regarding generalization of agency skills wouldn’t apply. We agree we should make it clearer how heavily the superexponential influences our conclusions, and we’ll try to emphasize that further in any later changes we make to the supplement.

Along with the time horizons forecast, we made a separate benchmark-and-gaps forecast, which is much less affected by this issue and more strongly informed our views (we made the time horizon extrapolation version late in the process as a simpler, easier-to-understand alternative).

You can see further discussion between Peter and Eli here and here; we’re continuing to talk to him and appreciate his engagement.

Where We Go From Here

We’re still thinking about this.

At the very least, we plan to keep blogging.

We’ll also be doing more runs of our TTX wargame. If you’re involved in AI policy and want to participate, see the “Tabletop Exercise” box at the bottom of our About page and contact us here.

We may also start working on some policy recommendations; the broad outlines shouldn’t be too surprising to anyone who’s listened to our podcasts, but we think there’s a lot of work to be done hammering out details.

Thanks so much to everyone who participated in the discussion around our scenario. And to keep up to date on our activities, subscribe here:
