Clarifying how our AI timelines forecasts have changed since AI 2027

Correcting common misunderstandings

Some recent news articles discuss updates to our AI timelines since AI 2027, most notably our new timelines and takeoff model, the AI Futures Model (see blog post announcement).1 While we’re glad to see broader discussion of AI timelines, these articles make substantial errors in their reporting. Please don’t assume that their contents accurately represent things we’ve written or believe! This post aims to clarify our past and current views.2

The articles in question include:

The Guardian: Leading AI expert delays timeline for its possible destruction of humanity

The Independent: AI ‘could be last technology humanity ever builds’, expert warns in ‘doom timeline’

Inc: AI Expert Predicted AI Would End Humanity in 2027—Now He’s Changing His Timeline

Daily Mirror: AI expert reveals exactly how long is left until terrifying end of humanity

Our views at a high level

Important things that we believed in Apr 2025 when we published AI 2027, and still believe now:

AGI and superintelligence (ASI) will eventually be built and might be built soon, and thus we should be prepared for them to be built soon.

We are highly uncertain about when AGI and ASI will be built; we certainly cannot confidently predict a specific year.

How exactly have we changed our minds over the past 9 months? Here are the highlights.

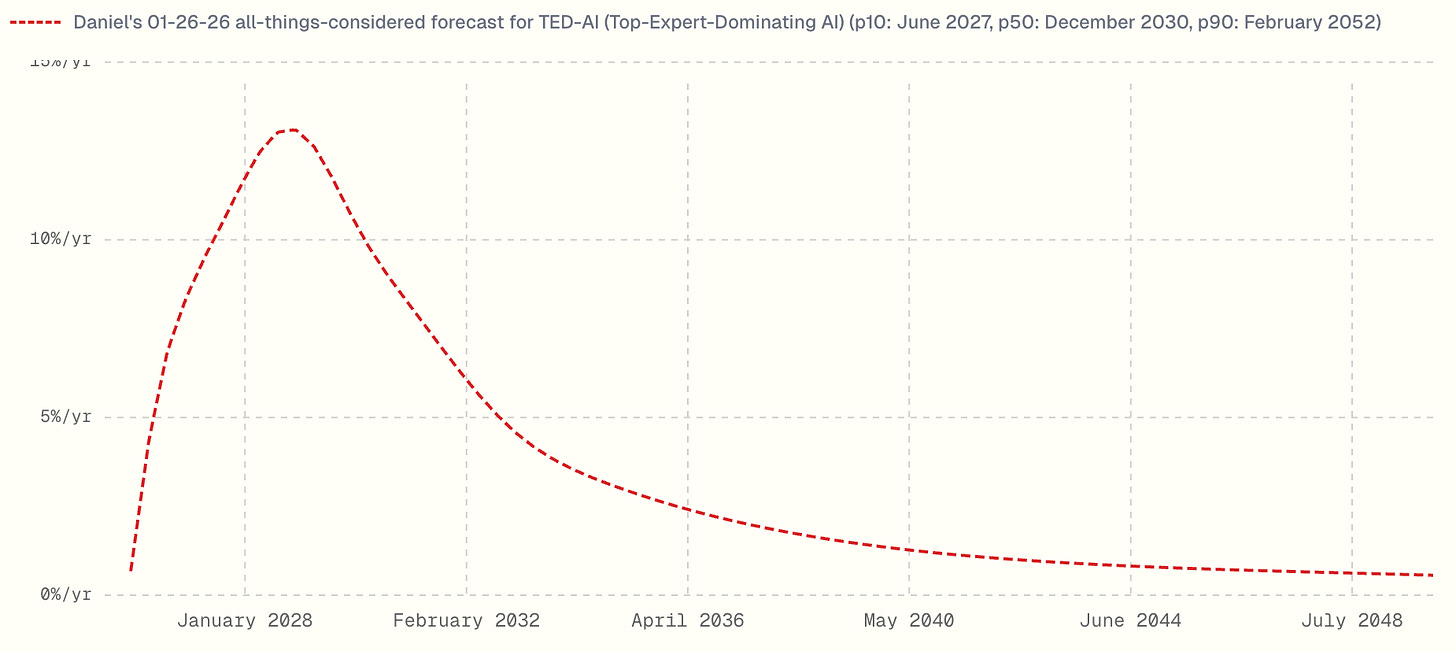

Here is Daniel’s current all-things-considered distribution for TED-AI (source):

If you’d like to see a more complete table including more metrics as well as our model’s raw outputs, see below.

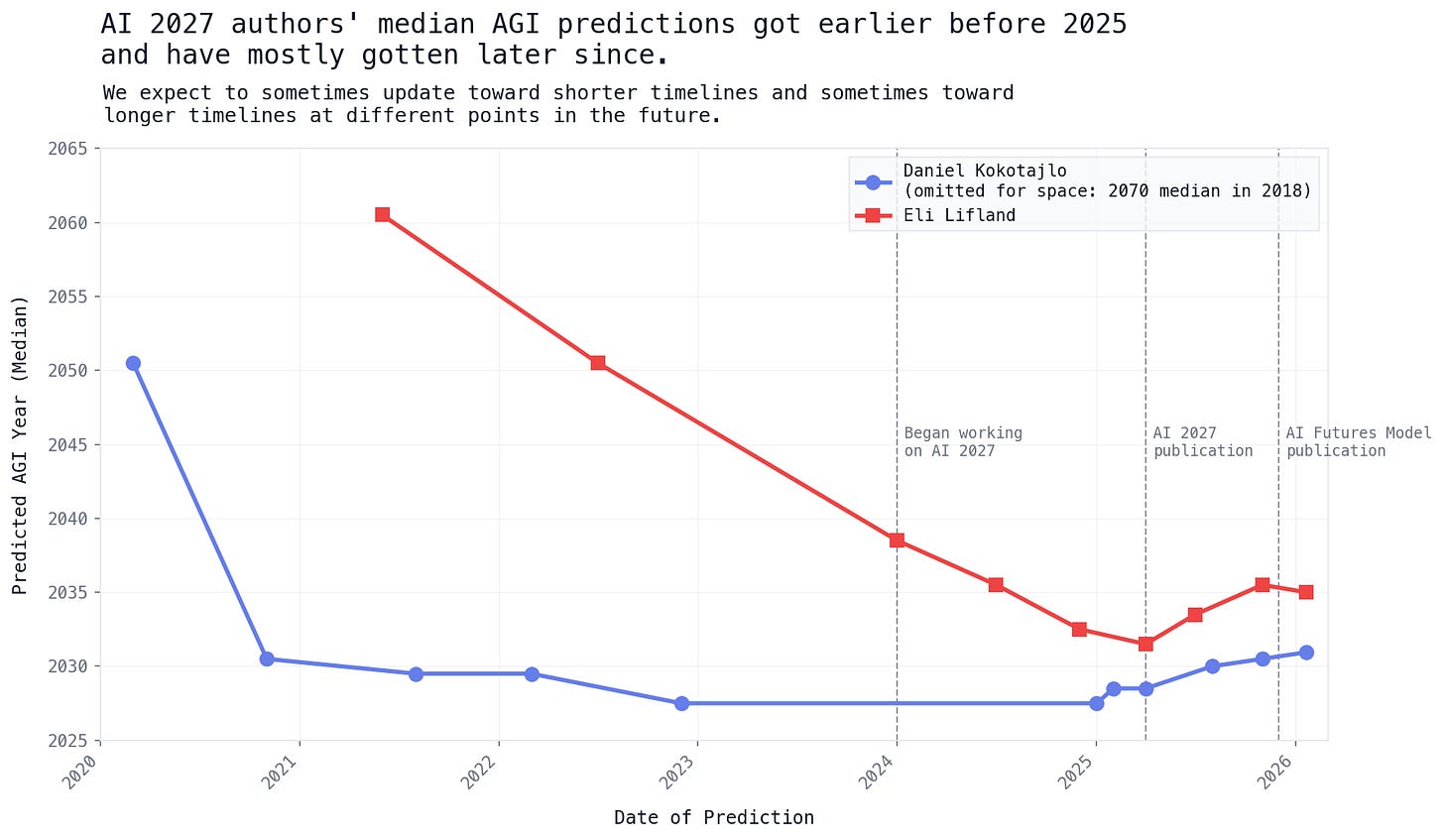

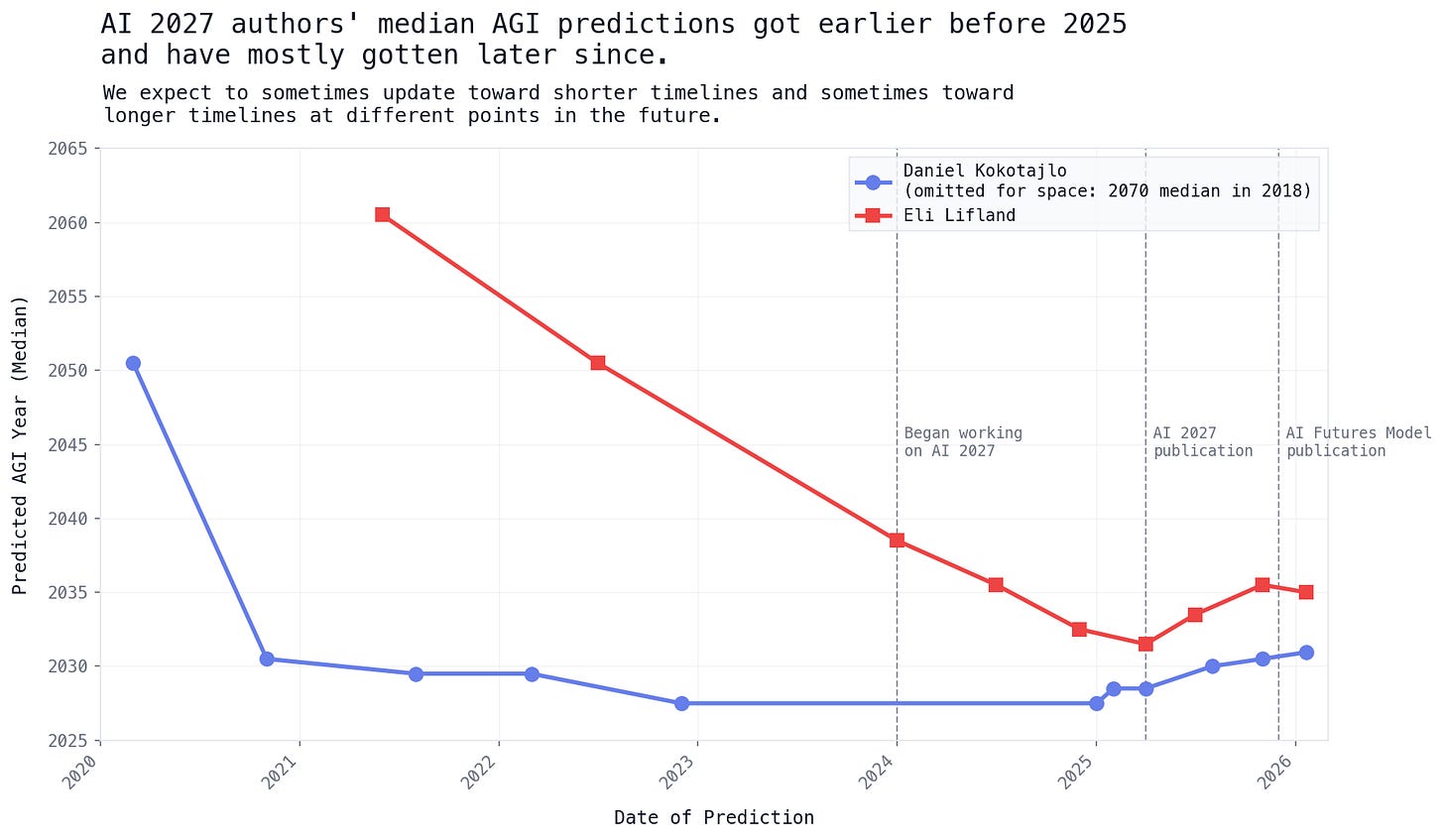

We’ve also made this graph of Daniel and Eli’s AGI medians over time, which goes further into the past:

See below for the data behind this graph.

Correcting common misunderstandings

Categorizing the misunderstandings/misrepresentations in articles covering our work:

Implying that we were confident an AI milestone (e.g. SC, AGI, or ASI) would happen in 2027 (Guardian, Inc, Daily Mirror). We’ve done our best to make it clear that it has never been the case that we were confident AGI would arrive in 2027. For example, we emphasized our uncertainty several times in AI 2027 and, to make it even more clear, we’ve recently added a paragraph explaining this to the AI 2027 foreword.

Comparing our old modal prediction to our new model’s prediction with median parameters (Guardian, Independent, WaPo, Daily Mirror), and comparing our old modal prediction to Daniel’s new median SC/AGI predictions as stated in his tweet (WaPo). This is wrong, but tricky since we didn’t report our new mode or old medians very prominently. With this blog post, we’re hoping to make this more clear.

Implying that the default displayed prediction on aifuturesmodel.com, which used Eli’s median parameters until after the articles were published, represents Daniel’s view (Guardian, Independent, WaPo, Daily Mirror). On our original website, the top-left explanation said clearly that the default displayed milestones used Eli’s parameters. Still, we’ve changed the default to use Daniel’s parameters to reduce confusion.

Detailed overview of past timelines forecasts

Forecasts since Apr 2025

Below we present a detailed overview of our Apr 2025 and recent timelines forecasts. We explain the columns and rows below the table (scroll right to see all of the cells).

The milestones in the first row are defined in the footnotes.

Explaining the summary statistics in the second row:

Modal year means the year that we think is most likely for a given milestone to arrive.

Median arrival date is the time at which there is a 50% chance that a given milestone has been achieved.

Arrival date with median parameters is the model’s output if we set all parameters to their median values. Sometimes this results in a significantly different value from the median of Monte Carlo simulations. This is not applicable to all-things-considered forecasts.
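To illustrate how the three summary statistics above can come apart, here is a minimal sketch using a toy model. It is not the AI Futures Model: the two-phase structure, the names `toy_arrival` and `summarize`, the start year, and all parameter values are assumptions made up for this example. Each phase length is drawn from a right-skewed (lognormal) distribution, so the median of the simulated arrival dates lands later than the arrival date computed from the median parameters.

```python
import math
import random
import statistics
from collections import Counter

START_YEAR = 2026.0  # hypothetical starting point for the toy model


def toy_arrival(phase_a_years: float, phase_b_years: float) -> float:
    """Toy model: the milestone arrives after two sequential phases."""
    return START_YEAR + phase_a_years + phase_b_years


def summarize(n_samples: int = 100_000, seed: int = 0) -> dict:
    """Compute the three summary statistics for the toy model."""
    rng = random.Random(seed)
    # Illustrative values only: each phase has a median of 3 years.
    median_a, median_b, sigma = 3.0, 3.0, 1.0
    # lognormvariate(mu, sigma) has median exp(mu), hence mu = log(median).
    arrivals = [
        toy_arrival(
            rng.lognormvariate(math.log(median_a), sigma),
            rng.lognormvariate(math.log(median_b), sigma),
        )
        for _ in range(n_samples)
    ]
    # Modal year: the calendar year containing the most simulated arrivals.
    modal_year = Counter(int(a) for a in arrivals).most_common(1)[0][0]
    return {
        "modal_year": modal_year,
        # Median arrival date: half of the simulations arrive before it.
        "median_arrival": statistics.median(arrivals),
        # Single model run with every parameter set to its median.
        "arrival_with_median_params": toy_arrival(median_a, median_b),
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

In this toy setup the modal year is the earliest of the three and the median of the simulations is the latest, which is why comparing a mode from one forecast with a median from another can suggest a shift that did not happen.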

Explaining the prediction sources in the remaining rows:

All-things-considered forecasts: Our forecasts for what will happen in the world, including adjustments on top of the outputs of our timelines and takeoff models.

“Apr 2025 timelines model outputs, benchmarks and gaps” and “Apr 2025 timelines model outputs, time horizon extension” contain the outputs of the 2 variants of our timelines model that we published alongside AI 2027.

“Dec 2025 AI Futures Model outputs” contains the outputs of our recent AI timelines and takeoff model.

2018-2026 AGI median forecasts

Below we outline the history of Daniel and my (Eli’s) forecasts for the median arrival date of AGI, starting as early as 2018. This is the summary statistic for which we have the most past data on our views, including many public statements.

Daniel

Unless otherwise specified, I assumed for the graph above that a prediction for a specific year is a median of halfway through that year (e.g. if Daniel said 2030, I assume 2030.5), given that we don’t have a record of when within that year the prediction was for.

2013-2017: Unknown. Daniel started thinking about AGI and following the field of AI around 2013. He thought AGI arriving within his lifetime was a plausible possibility, but we can’t find any records of quantitative predictions he made.

2018: 2070. On Metaculus Daniel put 30% for human-machine intelligence parity by 2040, which maybe means something like 2070 median? (note that this question may resolve before our operationalization of AGI as TED-AI, but at the time Daniel was interpreting it as something like TED-AI)

Early 2020: 2050. Daniel updated to 40% for HLMI by 2040, meaning maybe something like 2050 median.

Nov 2020: 2030. “I currently have something like 50% chance that the point of no return will happen by 2030.” (source)

Aug 2021: 2029. “When I wrote this story, my AI timelines median was something like 2029.” (source)

Early 2022: 2029. “My timelines were already fairly short (2029 median) when I joined OpenAI in early 2022, and things have gone mostly as I expected.” (source)

Dec 2022: 2027. Daniel joined OpenAI in late 2022 and his median dropped to 2027. “My overall timelines have shortened somewhat since I wrote this story… When I wrote this story, my AI timelines median was something like 2029.” (source)

Nov 2023: 2027. 2027 as “Median Estimate for when 99% of currently fully remote jobs will be automatable” (source)

Jan 2024: 2027. This is when we started the first draft of what became AI 2027.

Feb 2024: 2027. “I expect to need the money sometime in the next 3 years, because that’s about when we get to 50% chance of AGI.” (source, probability distribution)

Jan 2025: 2027. “I still have 2027 as my median year for AGI.” (source)

Feb 2025: 2028. “My AGI timelines median is now in 2028 btw, up from the 2027 it’s been at since 2022. Lots of reasons for this but the main one is that I’m convinced by the benchmarks+gaps argument Eli Lifland and Nikola Jurkovic have been developing. (But the reason I’m convinced is probably that my intuitions have been shaped by events like the pretraining slowdown)” (source)

Apr 2025: 2028. “between the beginning of the project last summer and the present, Daniel’s median for the intelligence explosion shifted from 2027 to 2028” (source)

Aug 2025: EOY 2029 (2030.0). “Had a good conversation with @RyanPGreenblatt yesterday about AGI timelines. I recommend and directionally agree with his take here; my bottom-line numbers are somewhat different (median ~EOY 2029) as he describes in a footnote.” (source)

Nov 2025: 2030. “Yep! Things seem to be going somewhat slower than the AI 2027 scenario. Our timelines were longer than 2027 when we published and now they are a bit longer still; ‘around 2030, lots of uncertainty though’ is what I say these days.” (source)

Jan 2026: Dec 2030 (2030.95). (source)
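The convention for turning these statements into points on the graph can be sketched as follows. The function name `year_to_decimal` and its parameters are hypothetical, and the month-level rule (placing a month at its midpoint) is an assumption: it gives about 2030.96 for Dec 2030, close to but not exactly the 2030.95 listed above, so the graph may have used a slightly different rule.

```python
from typing import Optional


def year_to_decimal(
    year: int, month: Optional[int] = None, end_of_year: bool = False
) -> float:
    """Convert a stated median into a decimal year for plotting.

    - Bare year (e.g. 2030): assume the middle of the year, 2030.5.
    - End of year (e.g. EOY 2029): the start of the next year, 2030.0.
    - Year and month (e.g. Dec 2030): assumed here to be the middle
      of that month.
    """
    if end_of_year:
        return float(year + 1)
    if month is None:
        return year + 0.5
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    return year + (month - 0.5) / 12


if __name__ == "__main__":
    print(year_to_decimal(2030))                    # bare year
    print(year_to_decimal(2029, end_of_year=True))  # EOY 2029
    print(round(year_to_decimal(2030, month=12), 2))  # Dec 2030
```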

Eli

Unless otherwise specified, I assumed for the graph above that a prediction for a specific year is a median of halfway through that year (e.g. if I said 2035, I assume 2035.5), given that we don’t have a record of when within that year the prediction was for.

2018-2020: Unknown. I began thinking about AGI in 2018, but I didn’t spend large amounts of time on it. I predicted median 2041 for weakly general AI on Metaculus in 2020; I’m not sure what I thought for AGI, but it was probably later.

2021: 2060. ‘Before my TAI timelines were roughly similar to Holden’s here: “more than a 10% chance we’ll see transformative AI within 15 years (by 2036); a ~50% chance we’ll see it within 40 years (by 2060); and a ~2/3 chance we’ll see it this century (by 2100)”.’ (source). I was generally applying a heuristic that people into AI and AI safety are biased toward / selected for short timelines.

Jul 2022: 2050. “I (and the crowd) badly underestimated progress on MATH and MMLU… I’m now at ~20% by 2036; my median is now ~2050 though still with a fat right tail.” (source)

Jan 2024: 2038. I reported a median of 2038 in our scenario workshop survey. I forget exactly why I updated toward shorter timelines, probably faster progress than expected e.g. GPT-4 and perhaps further digesting Ajeya’s update.

Mid-2024: 2035. I forget why I updated; I think it was at least in part due to spending a bunch of time around people with shorter timelines.

Dec 2024: 2032. Updated on early versions of the timelines model predicting shorter timelines than I expected. Also, RE-Bench scores were higher than I would have guessed.

Apr 2025: 2031. Updated based on the two variants of the AI 2027 timelines model giving 2027 and 2028 superhuman coder (SC) medians. My SC median was 2030, higher than the within-model median because I placed some weight on the model being confused, a poor framework, missing factors, etc. I also gave some weight to other heuristics and alternative models, which seemed to overall point in the direction of longer timelines. I shifted my median back by a year from SC to get one for TED-AI/AGI.

Jul 2025: 2033. Updated based on corrections to our timelines model and downlift.

Nov 2025: 2035. Updated based on the AI Futures Model’s intermediate results. (source)

Jan 2026: Jan 2035 (~2035.0). For Automated Coder (AC), my all-things-considered median is about 1.5 years later than the model’s output. For TED-AI, my all-things-considered median is instead 1.5 years earlier than the model’s output, because I believe the model’s takeoff is too slow, due to modeling neither hardware R&D automation nor broad economic automation. See my forecast here. My justification for pushing back the AC date is in the first “Eli’s notes on their all-things-considered forecast” expandable, and the justification for adjusting takeoff to be faster is in the second.

In this post we’re mostly discussing timelines to AI milestones, but we also think “takeoff” from something like AGI or full coding automation to vastly superhuman AIs (e.g. ASI) is at least as important to forecast, despite getting far less attention. We focus on timelines because that’s what the articles have focused on.

From feedback, we also think that others besides the authors of these articles have had trouble understanding how our views and our model’s outputs have changed since AI 2027, giving us further motivation to make this post.
