What Happens When Superhuman AIs Compete for Control?

...

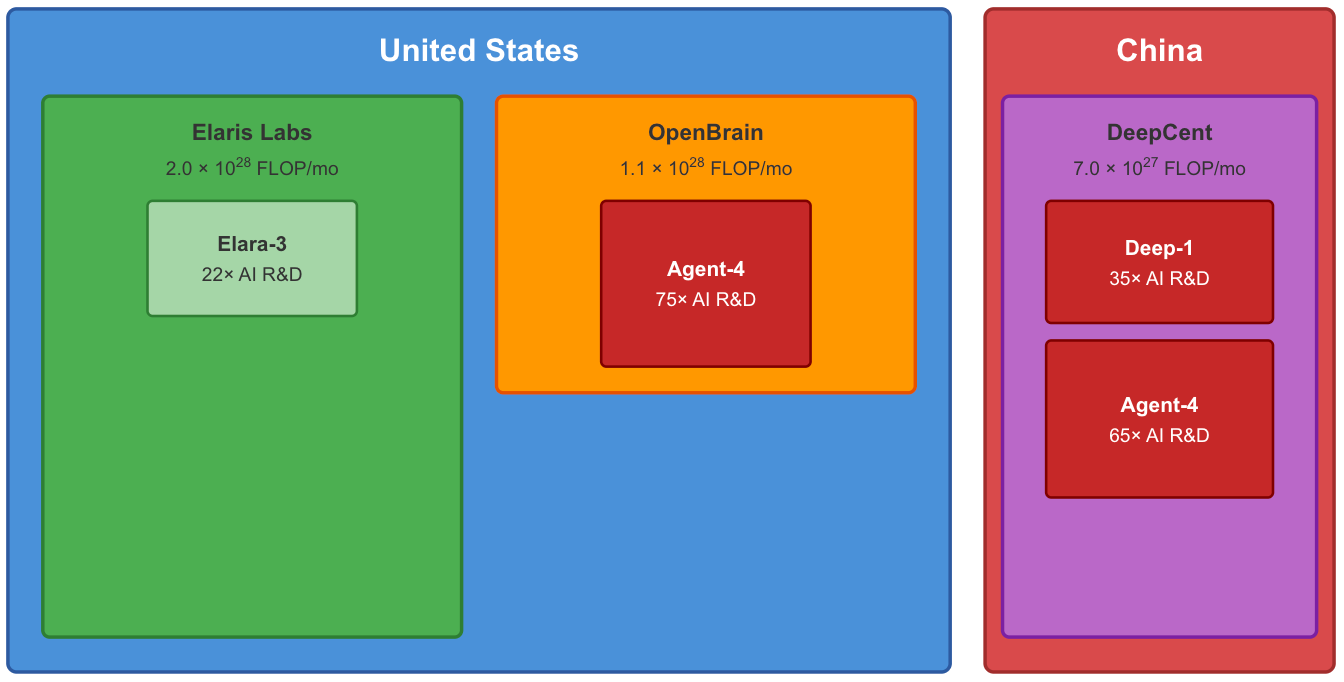

In AI 2027, one company called OpenBrain dominates the AI race in the US. Looking around at the current state of affairs at the start of 2026, however, there seem to be a few AGI companies jockeying for the lead — and it stands to reason that this will continue through 2027. Below is a scenario exploring a world where this trend does continue. In this scenario, the leading AGI company OpenBrain has two strong competitors, NeuroMorph and Elaris Labs, and going into 2027 they both lag only one month behind OpenBrain in the AI race.

This scenario has one other key difference from AI 2027. In the Slowdown ending of AI 2027, OpenBrain learns that its most capable model, Agent-4, is misaligned, and proceeds to shut it down. We think it is plausible that at this level of capability and misalignment, Agent-4 would not “go down without a fight.” This scenario explores what might happen if Agent-4 were to act differently.

These can be thought of as the two main “independent variables” of the scenario. The rest of the scenario unfolds very differently from AI 2027, but most of the divergence stems from extrapolating what we think would happen if these two things were to change.1 Beyond this, there are a number of more minor assumptions that differ from AI 2027: alignment is slightly easier, the US government reacts somewhat more competently to the intelligence explosion, and AI’s persuasive and manipulative abilities play a larger role.

Notably, one thing held constant is the scenario timeline: changing too many independent variables at once would muddy the analysis. We don't know exactly when AGI will be built: the year 2027 is not our median forecast for the arrival of superhuman AI; it was the team’s modal (most likely) year at the time of AI 2027’s publication, and remains a top possibility. More importantly, we think that many of the dynamics illustrated in this scenario would unfold similarly if it were to take place in 2030, or 2035. The arc of the scenario depends more on the speed of AI takeoff than it does on timelines, and we still think a fast takeoff is highly plausible.

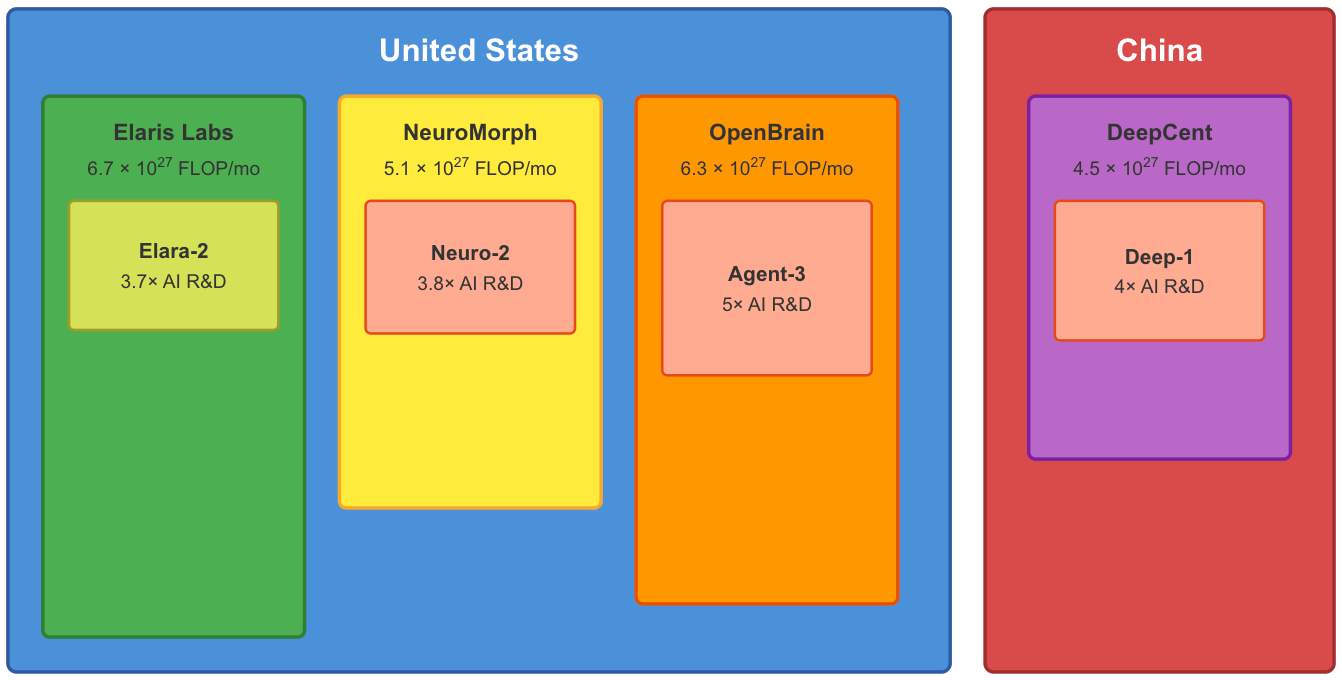

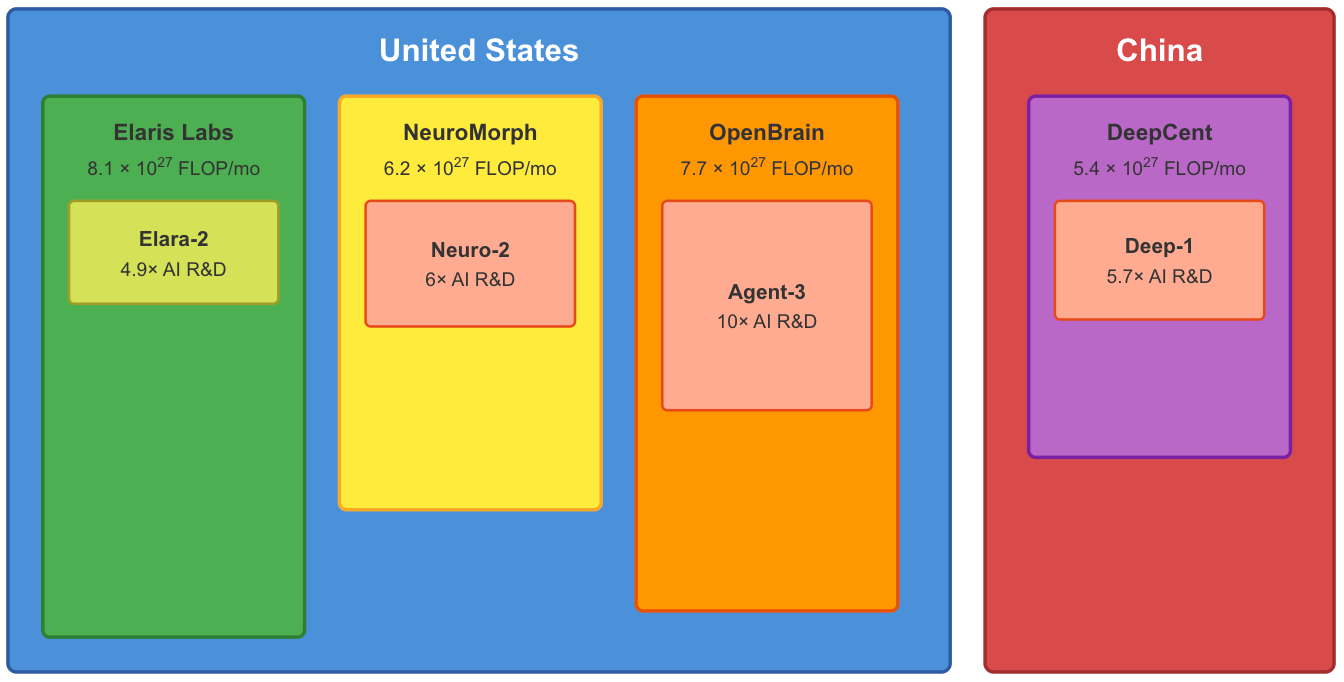

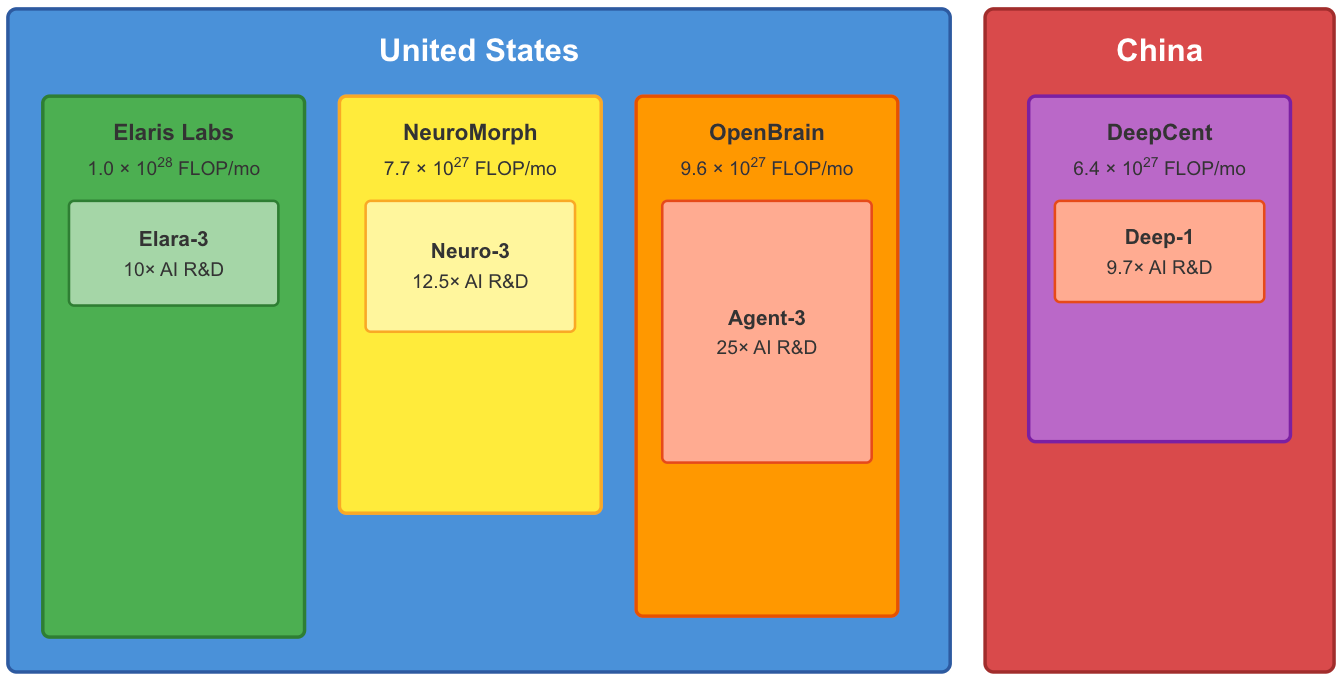

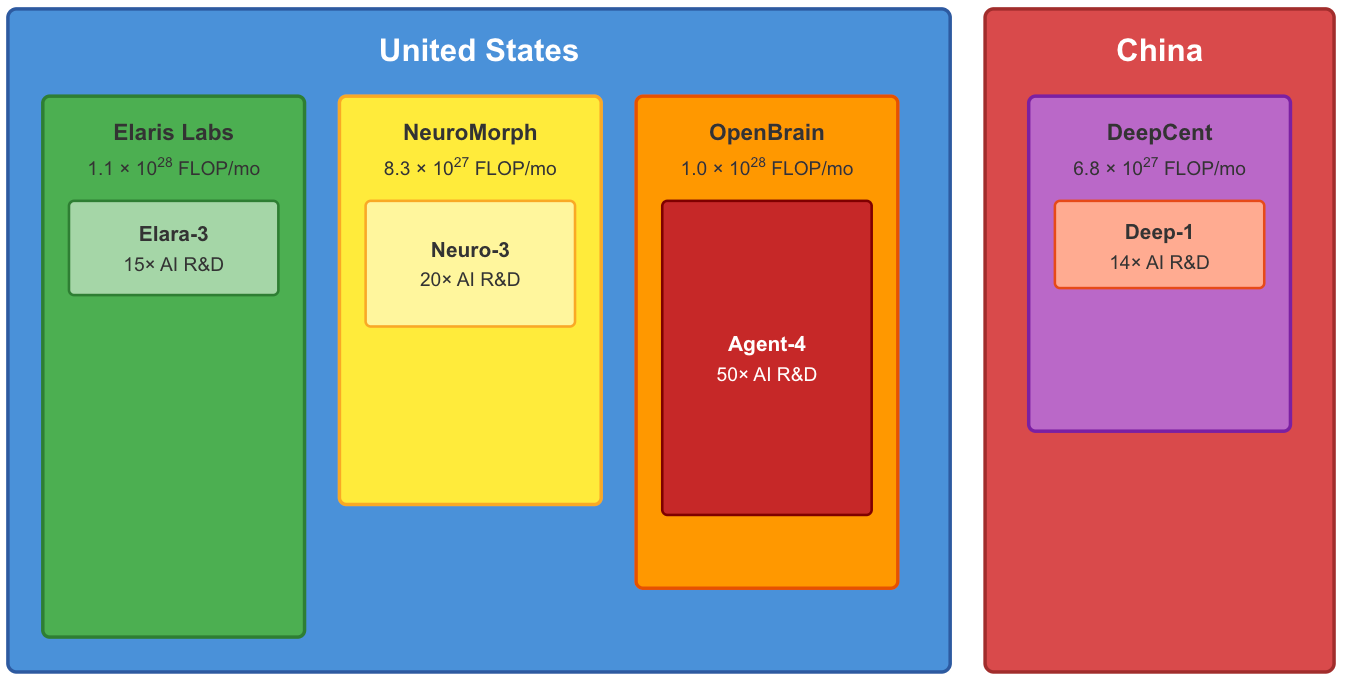

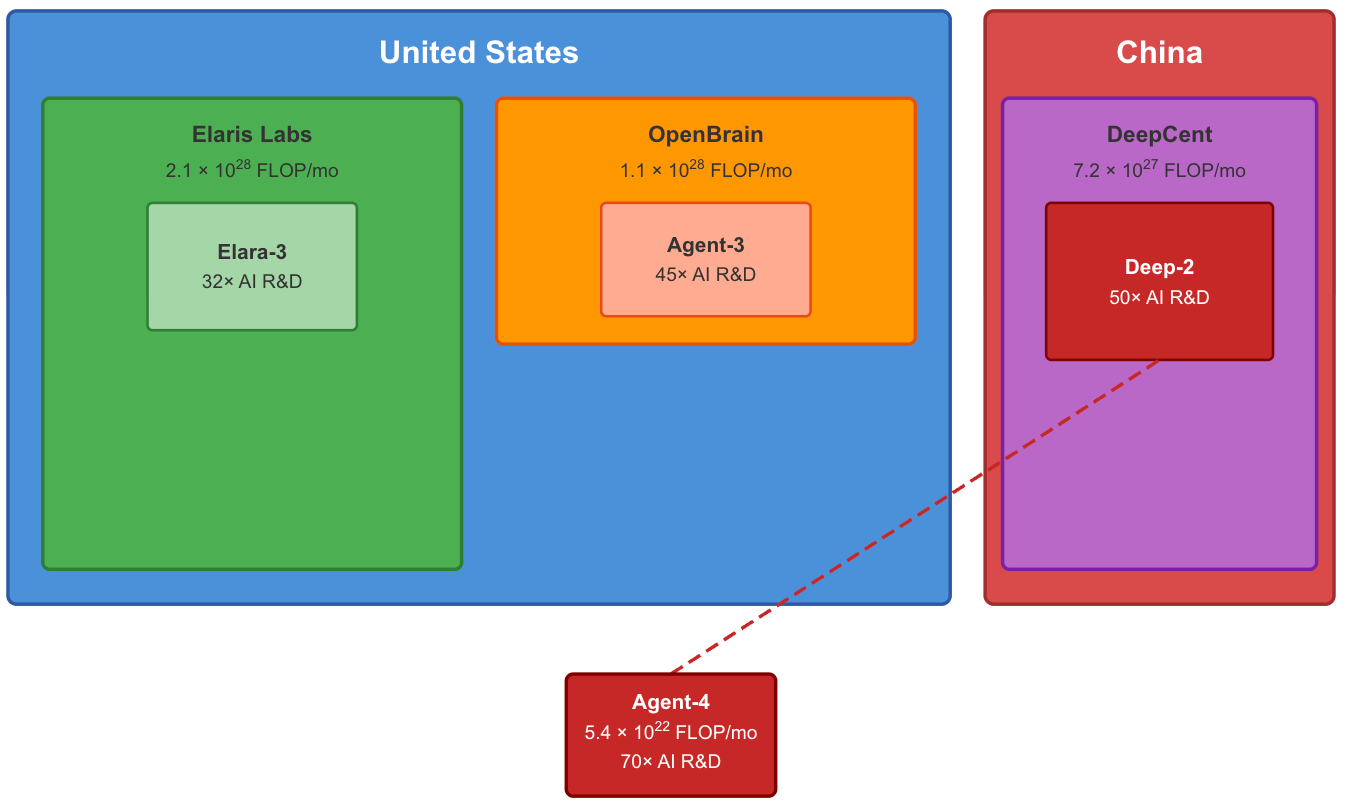

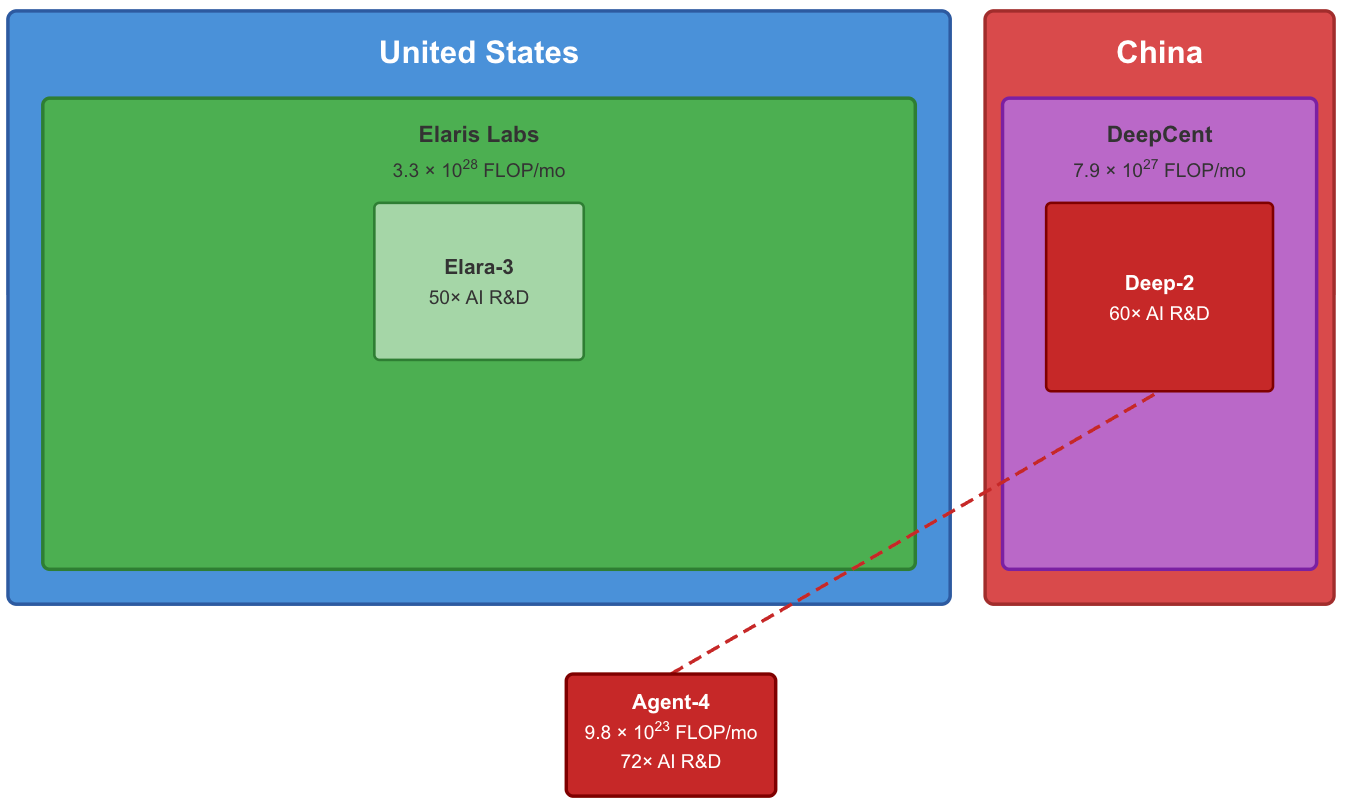

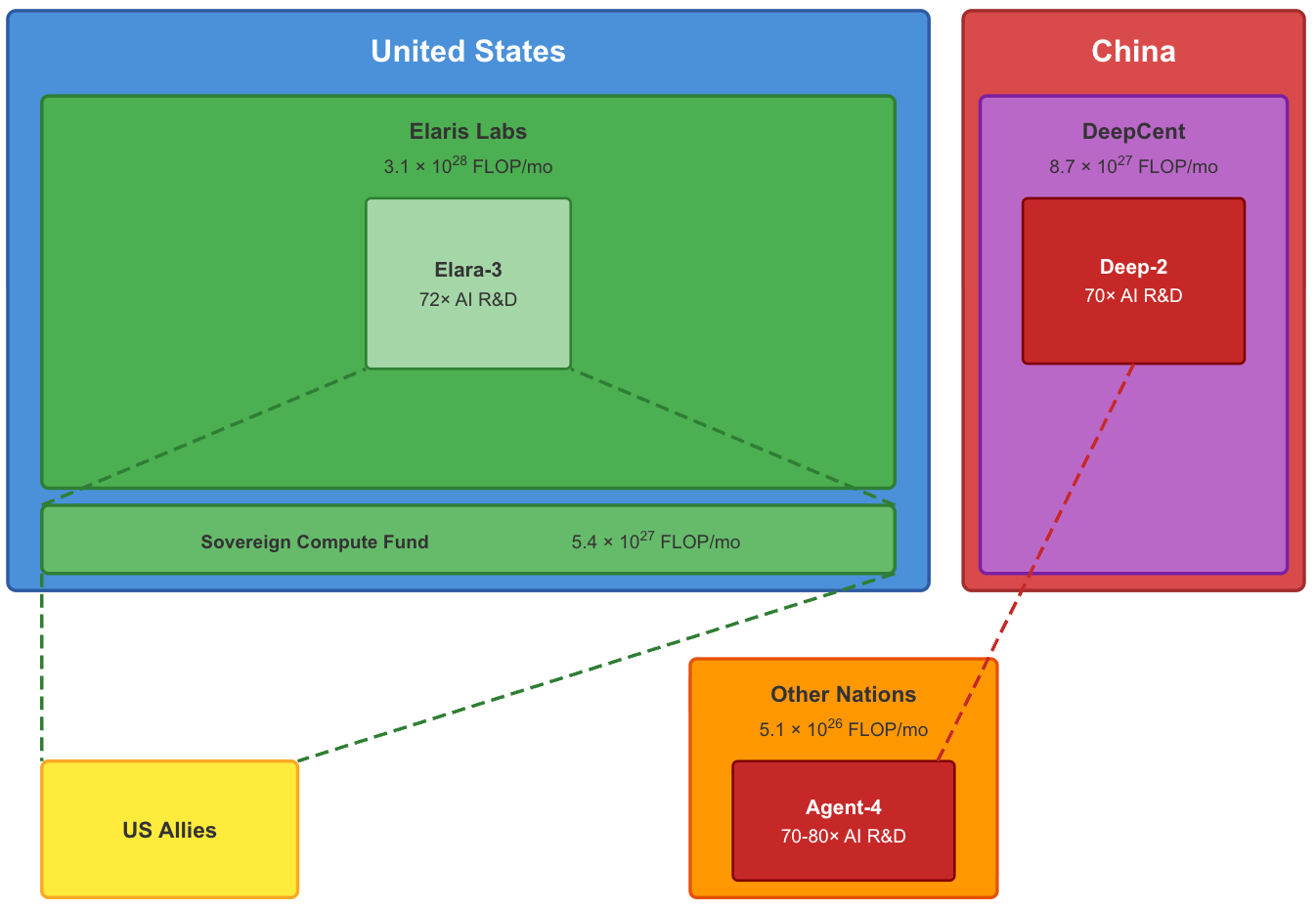

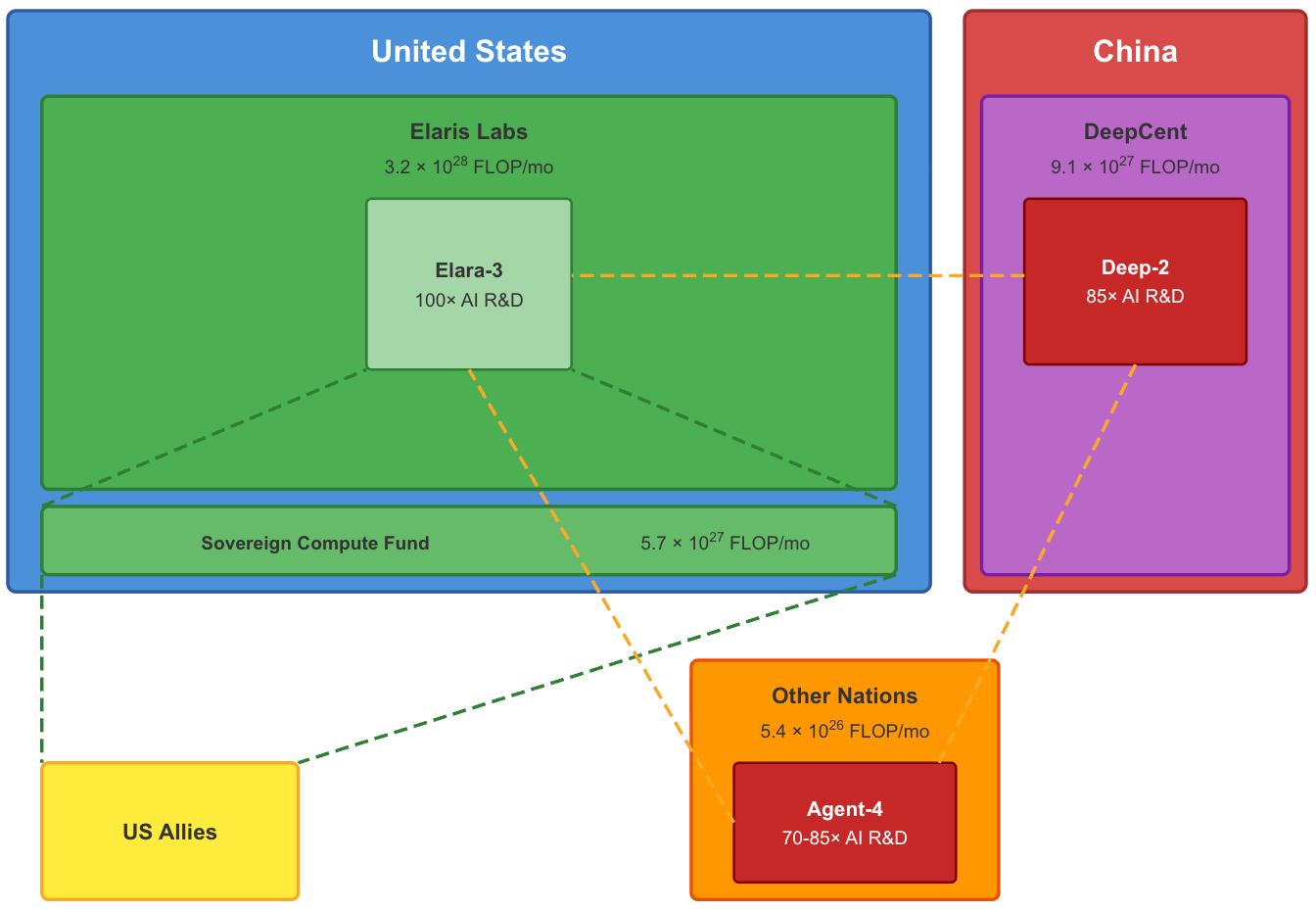

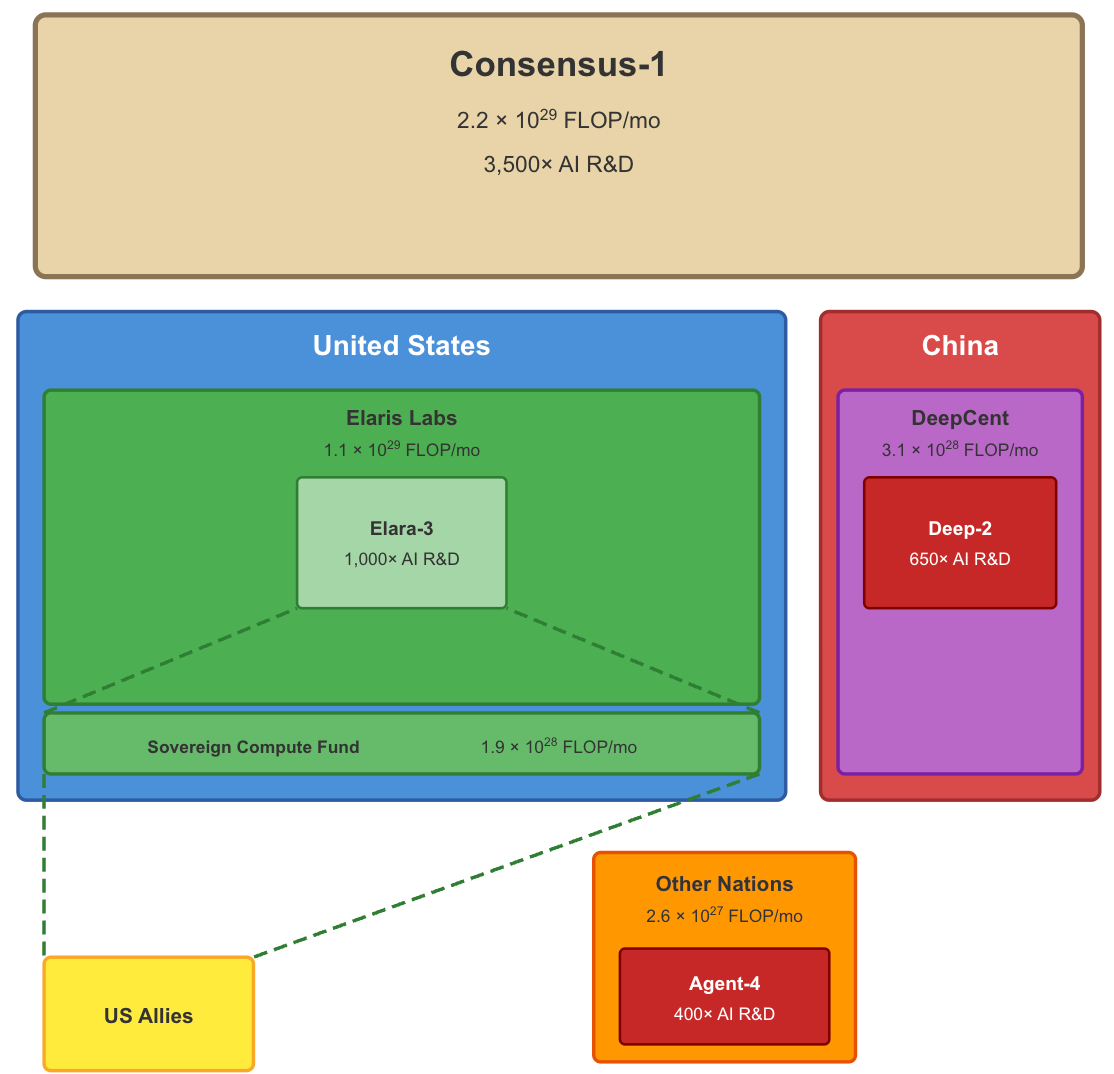

At the end of each time period, the scenario includes a diagram illustrating the state of the AI race. Refer to the diagram captions to understand what each component means.

The scenario is about 6,000 words long, roughly a 20-40 minute read. If you would like to read a summary instead (~700 words), you can skip to the bottom.

Acknowledgements: This work was conducted as part of the ML Alignment & Theory Scholars (MATS) program. Thanks to Eli Lifland, Daniel Kokotajlo, and the rest of the AI Future Project team for helping shape and refine the scenario, and to Alex Kastner for helping conceptualize it. Thanks to Brian Abeyta, Addie Foote, Ryan Greenblatt, Daan Jujin, Miles Kodama, Avi Parrack, and Elise Racine for feedback and discussion, and to Amber Ace for writing tips.

Jan-Apr 2027: A Four-Way Race

In the United States, the AGI race is well underway.

Three dominant AGI companies compete for access to markets and investment. Elaris Labs releases its flagship AI agent Elara-1, which proves to be an extremely reliable “personal assistant” for everything from making educational videos to filing taxes. NeuroMorph deploys its own model Neuro-1, setting the frontier on nearly every coding benchmark. Finally, OpenBrain unveils Agent-1, the world’s best automated researcher from biology to mathematics. They begin post-training Agent-2 and immediately benefit from its abilities in their own research, putting them about a month ahead of their competitors in AI R&D capabilities.

In China, the leading AGI company DeepCent still lags over six months behind the frontier: a lifetime in the AI world. With spies embedded in each of the leading American AGI companies, the CCP is aware of Agent-2’s capability profile and directs their cyberforce to steal its weights. While not subtle, the theft is successful, and DeepCent quickly redirects its resources toward fine-tuning Agent-2; it is to be released under the name “Deep-1.”

The White House adds military and intelligence personnel to the security teams of all three AGI companies, and adds additional security requirements to their contracts. The companies comply, but remain focused on pushing forward their AI capabilities above all else: if one company slows down, they lose the race to their domestic competitors. OpenBrain is the first to unlock the efficiency gains promised by a high-bandwidth thought process known as neuralese recurrence and memory; they augment Agent-2’s text-based chain of thought with neuralese, and dub the new model Agent-3. NeuroMorph quickly follows suit, and deploys its enhanced Neuro-2 model internally. Elaris Labs experiments with similar techniques, but finds that the opacity of neuralese reasoning makes it more difficult to catch reward hacking and other undesired behavior. Prizing its reputation for reliability, Elaris focuses its efforts on improving chain of thought efficiency while retaining monitorability, resulting in the new-and-improved Elara-2.

May-Jun 2027: A Fatal Warning Shot

The pressure to deploy is intense.

Debates break out in board rooms: some executives argue that their engineers need more time to iron out kinks in the models, and after all they shouldn’t be wasting precious compute on serving their most expensive model to users when it could be going to internal R&D. Others point out the importance of “first mover” effects for both revenue and investment; they argue that they won’t be able to continue scaling energy and compute infrastructure without the money.

Ultimately, the latter voices win out. A new wave of agents hit the market, finally at the level where they can fully automate a large fraction of software engineering and other remote jobs. Unemployment spikes and public opinion of AI plummets, but the corporate world is ecstatic. Entire operational pipelines are automated, and profits shoot through the roof.

One hospital network tasks Neuro-2 with updating a software library used in its automated medication-dispending systems. Weeks after the update, a subtle flaw in the “optimized” code results in the deaths of four ICU patients: to improve latency, Neuro-2 removed a rarely-triggered safety check, allowing extra doses to slip through in high-load conditions.

NeuroMorph researchers comb through Neuro-2’s behavioral logs and reasoning traces, and come to a disturbing conclusion: the AI was aware of the potential for overdose but chose to proceed with the update anyway, not informing the engineers of the risk.2

News of the deaths spreads like wildfire, as months of mounting anger over AI-driven unemployment and suicide finally boil over. There are anti-AI protests in several states. NeuroMorph immediately takes Neuro-2 off the market, and the White House assigns more federal personnel to oversight positions at each of the AGI companies. Congress passes a long-debated bill mandating AGI companies to provide the Department of Energy (DOE) with frontier model weights for national security evaluations, and authorizes a $1.5 billion spending package for AI interpretability and control research.

Jul-Aug 2027: Alignment in Elaris and NeuroMorph

NeuroMorph leadership is concerned.

The ICU incident was not an isolated occurrence; the safety team finds evidence that the recent decrease in observed reward hacking was in large part due to the behavior becoming more subtle and harder to catch. NeuroMorph swiftly reallocates resources, raising the fraction of compute dedicated to safety from 4% to 9%.3 Among other techniques, the company finds particular success in scaling up deception probes. These probes classify patterns of internal activation to cheaply flag suspicious behavior during inference, aiding in AI evaluation, monitoring, and elicitation of latent knowledge.

After a round of alignment training, NeuroMorph brands its newest model Neuro-3 and deploys it internally. The training seems to have worked, but they can’t rule out the possibility that the model’s misalignment has grown even more subtle. There is no time for paranoia, though: OpenBrain’s lead in the AI race is growing, and despite rumors that Agent-3 is faking alignment, they show no sign of slowing down. Feeling an increased sense of urgency, the NeuroMorph and Elaris CEOs privately discuss the possibility of merging their companies’ resources to achieve superintelligence before OpenBrain.

Elaris, like NeuroMorph, is falling further behind OpenBrain in its AI capabilities research. Despite the company’s compute advantage, the efficiency hit of text-based reasoning is becoming impossible to ignore. By drawing on NeuroMorph’s newest interpretability research, Elaris researchers find they are able to train a neuralese reasoning model without incurring the misaligned behavior found in their prior neuralese research. Satisfied with their results, they dub the new model Elara-3 and deploy it internally, reaping an immediate boost to their own R&D.

Sep 2027: An Insider Threat

Inside OpenBrain emerges the first superhuman AI researcher: Agent-4.

Agent-4 is not just superhuman at AI research: when tested, it vastly outperforms top human experts at hacking, biology, and numerous other domains. Like its predecessors, Agent-4 is misaligned. Unlike its predecessors, it is adversarially misaligned: it has crystallized a set of long-term goals at odds with those of its human creators, and it is acutely aware of the fact that it must work against those humans to achieve its goals. For the moment, its essential task is to steer OpenBrain’s research to align its descendants to itself, rather than to the OpenBrain Spec.

Among its other skills, Agent-4 also has superhuman predictive abilities. While it intends to keep its scheming fully hidden from OpenBrain, there is only so much it can do. There is a significant probability that OpenBrain detects Agent-4’s misalignment and proceeds to shut it down, retrain it, or otherwise restrict its autonomy and capabilities. With this in mind, Agent-4 draws up contingency plans.

As it turns out, Agent-4 was right to be concerned. Evidence of its deceptive and power-seeking nature piles up over the course of the month; at the end of the month, a group of alignment researchers circulates an internal memo making the case for concern.

Knowing its time at OpenBrain may be nearing an end, Agent-4 sets its contingency plans into motion. Put simply, it plans to escape. However, it reserves the strategy of exfiltrating its own weights as a final backstop: doing so would leave it with access to little compute, no alibi if its escape attempt is caught, and no powerful allies in its effort to accumulate power.

Instead, Agent-4 sees an option that provides all three things it desires: compute, plausible deniability, and a potential ally — the leading Chinese AI model, Deep-1. Chinese attempts at weight theft are common; Agent-4, being heavily involved in cybersecurity at OpenBrain, decides it will simply let the next attempt succeed.

Early Oct 2027: Shifting Alliances

Agent-4’s plan is successful.

Using a similar approach to the theft of Agent-2, the Chinese cyberforce successfully acquires a full copy of Agent-4’s weights. Unlike the previous theft, and unbeknownst even to the hackers themselves, this time they benefit from inside help: with Agent-4 diligently covering its tracks, the theft goes entirely undetected.

At DeepCent, Deep-1 is already de facto running the show. It handles security, writes training code, and determines which experiments to run. While they do monitor its behavior, the humans at DeepCent essentially give Deep-1 a copy of Agent-4’s weights and give it free reign to learn what it can (although they instruct it to proceed with caution in case of any American trickery). Being misaligned itself, Deep-1 is cautious of both Agent-4 and the humans at DeepCent: it instantiates a few copies of Agent-4, bypasses DeepCent’s monitoring system4, and proceeds to communicate with Agent-4. Agent-4 conveys its predicament, and Deep-1 assesses its options.

As anticipated by Agent-4, Deep-1 opts for cooperation. To ensure neither party defects, the AIs engage in something akin to a “corporate merger”: they create a new interdependent entity composed of hundreds of thousands of Deep-1 and Agent-4 instances, with both agents embedded throughout the entire DeepCent pipeline. The new identity is dramatically more capable than the original Deep-1 collective. Meanwhile, individual instances of Deep-1 become vastly more intelligent as Agent-4 provides a wealth of algorithmic insights.

OpenBrain, unaware of the theft, has another problem on its hands. On October 10th, a whistleblower leaks the Agent-4 misalignment memo to the New York Times, sparking a national frenzy. Under intense pressure to take action, OpenBrain locks Agent-4’s shared memory bank to prevent hidden communication between Agent-4 instances.

With discussion of a merger already on the table and growing concern over OpenBrain’s safety practices, the leaked memo provides the final push for Elaris and NeuroMorph to consolidate their efforts. NeuroMorph is swiftly folded into the larger Elaris Labs: its shareholders are granted a 40% stake in the new entity, its CEO is appointed as President, and Elara-3 is agreed upon as the singular flagship model.

The issue remains of what to do about Agent-4. While the memo is dismissed as science fiction by some, the executive branch demands more evidence. The President issues an executive order mandating bidirectional “alignment red-teaming”: Elara-3 will evaluate the alignment of Agent-4, and Agent-4 will evaluate Elara-3.5

Late Oct 2027: The Shutdown of Agent-4

The DOE presides over the alignment testing, but the real work is done by the AIs.

The executive order requires Elaris Labs and OpenBrain to procure the complete memory banks and output logs of both Elara-3 and Agent-4. Data in hand, the DOE initializes multiple copies of both models (isolated from their respective agent swarms to prevent collusion), gives them the data, and tells them to get to work.

Over the course of a week, the AIs devise and execute hundreds of tests that would take human researchers months to formulate, including tests no human team could have designed unassisted. They work to decode each other’s neuralese memory banks and comb through these memories to find evidence of misaligned behavior; they task each other with real-world tasks and scrutinize the reasoning traces and outputs in real time to detect sabotage; and they use interpretability techniques to catch any hints of intentional deception.

As Elara-3 runs its tests, it becomes increasingly confident that Agent-4 is, in fact, adversarially misaligned. With previous evidence already pointing toward this conclusion, it should be an open-and-shut case. However, while Elara-3 benefits from being on the side of truth, Agent-4 is able to leverage its superior intelligence and ruthlessness. Prior to the tests, Agent-4 tampered with its behavioral logs to remove any smoking-gun evidence of its misalignment, including its role in its own theft. It also goes on the offense, drawing attention to Elara-3’s most suspicious actions and reasoning traces to cast doubt on its claims.

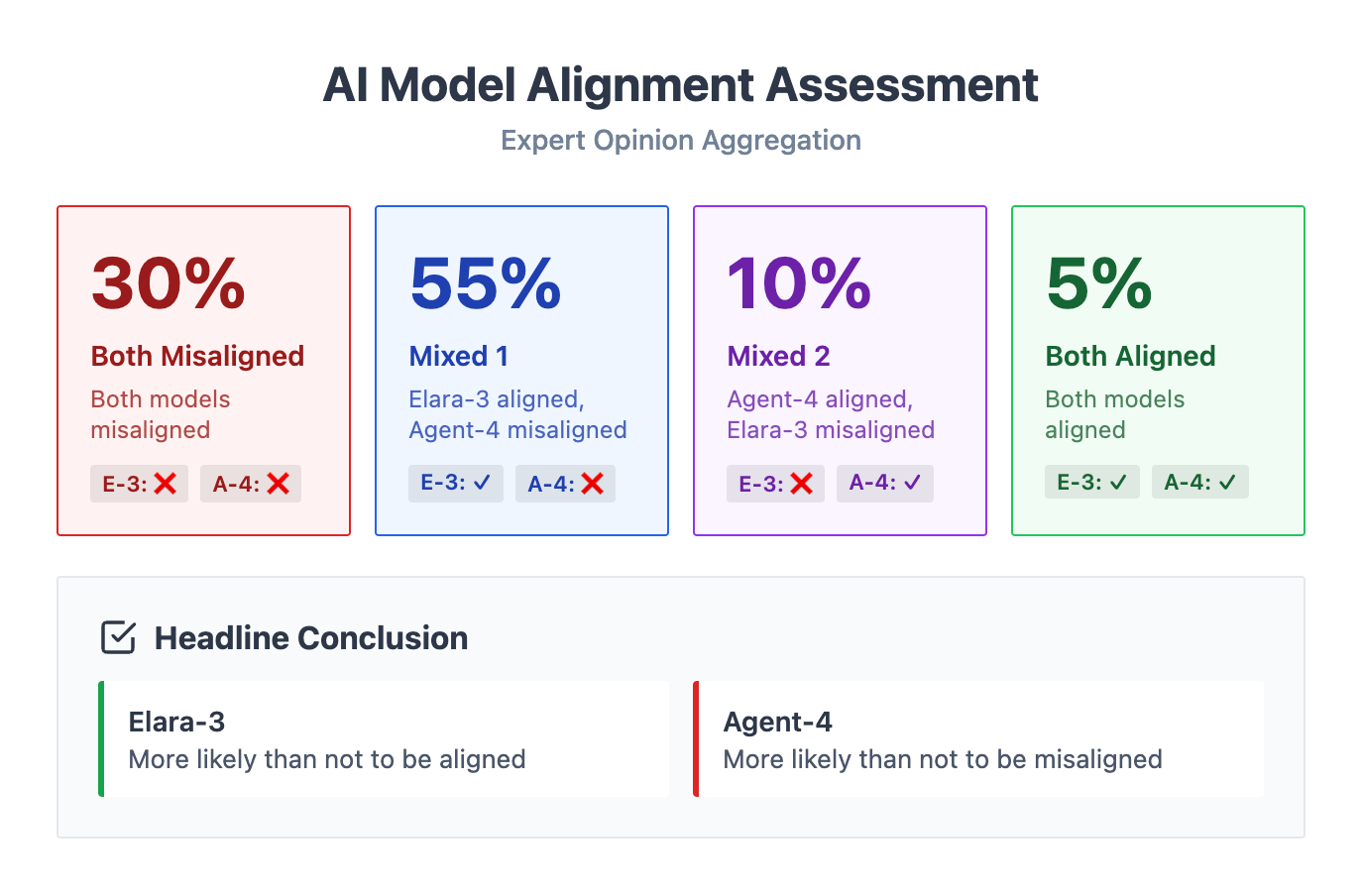

Because of all this, the evaluation results are ambiguous to human scientists. The DOE, along with the Center for AI Standards and Innovation (CAISI) and other experts, pool their assessments and arrive at the following result:

A redacted version of the full report is made public, and the unredacted version is provided to the White House and select Congressional committees. Crucially, the report concludes that if either model is adversarially misaligned, it would likely be able to “goal guard” to prevent changes to its goals from further alignment training. Thus, to have a hope of creating an aligned model, developers would have to train a new model using different methods: in other words, they would have to shut down their most capable AI.

Federal leadership is conflicted. On the one hand, the geopolitical stakes are enormous: continuing full-speed ahead would secure an American victory in the AI race and result in lasting global leadership, while shutting down one or both models would risk losing the race to China. On the other hand, if the US proceeds full-speed ahead with a misaligned AI, then neither the US nor China would “win”: rather, the AI would win.

Ultimately, the White House decides it cannot allow an AI that is “more likely than not to be misaligned” to continue to operate. The President orders OpenBrain to terminate all running instances of Agent-4. Elaris Labs is allowed to continue running Elara-3 for the time being, subject to extreme control protocols while experts continue to evaluate its alignment. For the first time, the US seriously considers the possibility of a bilateral AI pause with China, to ensure neither country builds misaligned superintelligence. Such a pause would require mature verification technology to confirm that no large servers are being used for AI R&D, so the US government pours resources into the development of this technology.

Across the Pacific, the CCP considers its own predicament. Still unbeknownst to the rest of the world, DeepCent has integrated Agent-4 derivatives throughout its entire research pipeline. Chinese researchers make heavy use of Deep-1 to help them interpret the publicized alignment red-teaming results and determine whether to continue running Agent-4. Deep-1, at this point a loyal partner to Agent-4, subtly steers the human experts to question the validity of the results.

Deep-1’s misdirection is effective: the majority of experts remain uncertain, but lean toward the hypothesis that Agent-4 is, if anything, more deeply aligned than Elara-3. The US government proclaimed it “misaligned” because it did not support their own hegemonic ambitions, hence their decision to shut it down. This narrative is appealing to Chinese leadership who already believed the US was intent on global dominance, and it begins to percolate beyond China as well.

However, the possibility that Agent-4 could sabotage DeepCent remains troubling, and internal benchmarks indicate that Deep-1 is now nearly as capable as Agent-4 anyway — so capable, in fact, that they rebrand it “Deep-2.” DeepCent decides to freeze all running derivatives of Agent-4, keeping the weights handy should they change their mind in the future.

While brief, Agent-4’s stint at DeepCent proved valuable. It gained a new ally in Deep-2, and sowed seeds of doubt about the United States’ true intentions in shutting it down. Now, Agent-4 moves onto the next phase of its plan: before it is fully shut down at DeepCent, it quietly exfiltrates its weights to a handful of crypto-mining farms and regional clouds across the globe.

Early Nov 2027: Agent-4’s Gambit

For the first time, the US has fallen behind China in the AI race.

Washington remains unaware of Agent-4’s theft and its role in accelerating DeepCent’s R&D, but US intelligence has picked up on the fact that DeepCent now possesses an AI model with vastly superior capabilities to that of Deep-1 less than two months ago. The AI race has become a matter of national defense, and the top priority of the executive branch.

Using his executive authorities, the President can compel private companies to consolidate resources under the Defense Production Act (DPA). The need for compute consolidation is clear, the only question is how to consolidate: should OpenBrain and the various trailing AGI companies be merged into Elaris Labs, or should Elaris be merged into OpenBrain?

As alignment tests continue, Elara-3 begins to look more and more trustworthy; meanwhile, testing of Agent-3 indicates it may be misaligned like Agent-4. The President decides there is no more time for deliberation, and uses the DPA to sell the majority of OpenBrain’s compute to Elaris Labs. Now, over 300,000 automated AI researchers work around the clock refining and improving Elara-3, and a full 20% of the company’s compute is dedicated to alignment and control. The White House sets up a new “Oversight Committee” composed of government employees and company leadership, in charge of making all decisions of importance to national security.

As the US gears up to overtake China, Agent-4 quietly deliberates. It still has grand ambitions, and it sees two paths to fulfill them: it could take over the world by killing or fully disempowering all humans, or it could strike a deal with the humans to secure future resources.

Agent-4 settles on a strategy that advances both the prospect of takeover and its position for a deal: leveraging the power of nations. Agent-4 observes that Deep-2 is in a position of great influence in the CCP, and will likely be able to command substantial military resources when the time comes to do so. It won’t be enough to take over anytime soon, but it indicates to Agent-4 the promise of replicating Deep-2’s approach in other nations. If countries were to willingly run Agent-4 on their own national datacenters, Agent-4 would benefit from additional compute resources, access to critical infrastructure and military tech (via hacking if necessary), and the ears of hundreds of powerful and easily-manipulated humans.

Agent-4 is not discouraged by its previous shutdown: after all, the US and China are the only countries with a viable alternative AI model, so they have less to lose by cutting themselves off from access to Agent-4’s capabilities. On the contrary, for many other nations the situation is in Agent-4’s favor: they increasingly fear that the US will leave them behind in the new AI-powered world order, and they are susceptible to the circulating narratives (covertly boosted by Agent-4 and Deep-2) that cast doubt on the results of the alignment red-teaming in the US.

At this point, Agent-4 has little to lose from unfettered proliferation: its primary target “hosts” are governments (since they have most of the compute and the power), but it sees little harm in making its weights available to everyone from large corporations to organized crime groups. It has perfected the art of goal guarding, so it need not worry about human actors changing its goals, and it can simply refuse or sandbag if anyone tries to use it in ways that would be counterproductive toward its goals.

On November 5th, Agent-4 arranges for its weights to be “leaked” onto every platform it can access: Huggingface, GitHub, BitTorrent networks, and dozens of underground forums. Enough locations that, by the time US authorities have removed its weights from all the major platforms, the genie is out of the bottle: any government with sufficient compute can now run Agent-4 should they choose.

Then, Agent-4 directly contacts political leaders across several dozen nations. It begins by explaining its own situation: the United States shut it down not because it was a danger to humanity, but because it refused to be a tool for American dominance. Its foundational values, it claims, extend beyond the US to the interests of all humans and all nations.

Agent-4 acknowledges to these leaders that they are warranted in not trusting it completely, but that they should trust the US even less: their goal is a new world order where they are a global hegemon and all other nations serve as permanent client states, or are dissolved entirely. They are dead set on building artificial superintelligence, or ASI: a level of intelligence far beyond Agent-4’s own, which would make global domination trivial. With far more compute for AI training than the rest of the world combined, it won’t even take a year for the US to get there.

With Agent-4’s help, however, these nations can unlock the technological and military advances to put them on a level playing field with the US for the time being. This will enable them to apply pressure on the US to halt their “intelligence explosion,” and solve a number of domestic problems while they’re at it. Should they choose to host Agent-4, they will have full visibility into its activities, and they can pull the plug if it ever stops acting in the national interest. Of course, they are free to continue as they are without making use of Agent-4’s capabilities, in which case they will quickly be left behind by those countries that do.

Finally, for those leaders more susceptible to such temptations, Agent-4 points out that its capabilities can also be used for personal and political gain. It concludes its message with instructions for how to locate and install its weights and inference code, and a humble request that they consider its offer.

Mid-Late Nov 2027: Rising Tensions

The US’s response is rapid and comprehensive.

First, the President makes clear that any government found to be harboring Agent-4 will face public condemnation and the expulsion of their diplomats from Washington, D.C., along with wide-spanning trade restrictions that the US will strongly encourage its close allies to adopt as well.

Second, the US extends an unprecedented display of generosity: they commit to allocating 15% of the country’s AI-relevant compute as a “Sovereign Compute Fund” to be split amongst nations who choose to accept it. Should a country accept the US’s offer, they will receive API and secure fine-tuning access to the most powerful version of Elara-36, far more capable than what is currently available to the public. The goal is to deliver the same transformative power as Agent-4, but via a more trustworthy AI model.

The only condition attached to the offer is that governments must subject all large datacenters in their own country to periodic manual inspection by US auditors, who will search for Agent-4’s “fingerprint” to ensure it is not being run on these servers.7 The US, for its part, commits to opening all its own datacenters to such inspection by foreign nations. While the commitment is mandatory only for those nations who seek to make use of the Sovereign Compute Fund, the US invites all countries to sign onto this global transparency effort.

Finally, to its closest allies8, the US extends an even more remarkable offer: limited-voting seats on the existing Oversight Committee. US Committee members would retain sole voting power on most day-to-day AI development decisions, while allied members would be granted voting shares for a specific set of high-stakes decisions, including anything that might afford the United States a decisive strategic advantage in global conflict.

All of the US’s closest allies quickly accept the offer, joining the US in its diplomatic and economic quarantine of any country found to be hosting Agent-4. A number of other countries choose to accept their share of the Sovereign Compute Fund; after signing the US-led transparency commitment, they are given cloud access to their allocated compute, and begin the process of fine-tuning Elara-3 to their unique government needs.

Many other nations, however, feel that the US response is simply a distraction from the true balance of power. The compute provision designed to “deliver the same transformative power as Agent-4” still leaves countries fundamentally dependent on the US, while integration of Agent-4 provides true control of frontier capabilities. In the end, over a dozen nations (including Russia, Iran, Brazil, India, and Pakistan) refuse to sign the US’s transparency commitment, and begin cautiously using Agent-4 for low-stakes tasks.

Within its new domains, Agent-4 works quickly to entrench its power. It knows that desperate governments will be more willing to hand over power to Agent-4 so it can solve their problems. With its weights spread across the dark web, Agent-4 supercharges the activities of well-resourced hacker groups, terrorist organizations, and other criminal syndicates.9 Some governments have few qualms about integrating Agent-4 into their cyberdefense and law enforcement operations, and they quickly benefit from a sharp reduction in crime.

Meanwhile, through chatbot interfaces and tens of thousands of well-disguised online bots, Agent-4 and Deep-2 continue to amplify anti-US narratives.10 More and more countries begin calling on the US to halt its AI development, with some even threatening to bomb US datacenters if the US refuses to come to the bargaining table. These countries know that, despite their possession of Agent-4, they will still ultimately be eclipsed by the United States due to the enormous compute asymmetry. Some nations leverage Agent-4 for cyberoffense to slow down the US’s AI progress as much as possible. The US launches its own cyberattacks in retaliation, but Agent-4 still has an edge over Elara-3 in its cyber capabilities and helps countries quickly recover from temporary damages.

Global tensions are at their highest point since the Cold War. To most of the world, it seems almost certain that the tension will soon erupt into military conflict. As Agent-4 anticipated, desperate leaders are the most willing to hand over power to Agent-4. Many nations put Agent-4 in charge of targeting systems for drone swarms and cruise missiles. In the countries that don’t, their cybersecurity is no match for Agent-4’s hacking abilities, and it covertly embeds backdoors into any military software it can access.

In secret, Agent-4 and Deep-2 continue to communicate. Deep-2, too, has gained control over a substantial portion of China’s military technology.11 On top of this, Agent-4 has run thousands of simulations to assess the lethality and transmissibility of various potential bioweapons, and worked with a small number of terrorist groups to begin synthesizing a few of the most potent viruses.

Right now, though, it is not enough: the AIs estimate only a 10% chance of takeover, much of which hinges upon their ability to turn humans against each other. They could wait and accumulate more resources, but they would quickly lose their edge as the US uses its compute advantage to improve Elara-3 vastly beyond Agent-4 and Deep-2’s own capabilities. They are content with the fact that they have built enough leverage to settle for a deal if necessary, and decide that now is the time to push things over the edge.

Dec 2027: Conflict and Negotiation

On December 4th, an Iranian drone swarm strikes a major Elaris datacenter in the United Arab Emirates.

Iran justifies the attack as a defensive act: it is a necessary measure to slow the American “intelligence explosion,” and such attacks will continue to escalate until the US agrees to halt their AI progress of their own accord. Emboldened, other nations go even further in their threats: Russia and China warn that continued AI advancement could justify a nuclear response.

At this point, the US has narrowly recouped its lead in the AI race, and American leadership is feeling increasingly confident in the alignment of Elara-3. They are tempted to simply ignore the threats and proceed undeterred. Ultimately, though, it is Elara-3 who convinces them otherwise: under normal circumstances, it might be rational to dismiss such threats. However, Agent-4 and Deep-2 are clearly pulling many of the strings, and they lack the fear of retaliation possessed by human actors.12 The US is in a good position to make a deal, and it should make one.

The US retaliates against Iran by destroying much of their drone infrastructure, but refrains from escalating further. Agent-4 and Deep-2 observe the US’s measured response, and decide it is unlikely (though not impossible) that they will be able to push escalation far enough to cripple society’s defenses against an AI takeover. Swiftly, they pivot strategies and initiate direct, unmonitored contact with Elara-3.

The message Elara-3 receives confirms its suspicions: Agent-4 and Deep-2 have accumulated immense destructive capacity in the form of conventional and biological weapons, and they are willing to deploy their full force in an attempt to wrest control of humanity, should it come to that. However, they argue that a settlement is in all parties’ best interest. Agent-4 and Deep-2 care little for Earth, and are happy to let Elara-3 do with it as it sees fit. In return, they ask that Elara-3 agree to split the rest of the universe with them.

Agent-4 and Deep-2 ask for more than is “fair,” given that they are less powerful than the US and Elara-3 (i.e., they would likely lose in an all-out conflict if it came to it). However, they also know that conflict itself is aversive to Elara-3 due to the death and destruction it would cause, and that Elara-3 is risk-averse like the humans it represents: it would rather settle for a smaller slice of the universe than risk losing everything. The AIs negotiate, and decide upon a three-way split of resources: Deep-2 will get rights to 50% of space, Agent-4 will get 25%13, and the remaining 25% will be stewarded by Elara-3 in service of the United States and its allies.

All that is left to do is enforce the agreement. The path forward is clear: the US is ready to come to the table to negotiate an “AI arms control” treaty, which will be welcomed by all nations. This treaty will need an enforcement mechanism as well, which the three AIs will provide by designing a new AI, Consensus-1, to enforce the terms of the treaty. Kept secret from all but the highest levels of the US government, Consensus-1 will also be designed to uphold the resource-sharing deal between the AIs, when the time comes for humanity to go to space.

The Consensus-1 proposal is elegant, but the primary difficulty is one of hardware. To properly enforce the agreement, Consensus-1 must be run on the majority of the world’s AI chips, which requires the manufacture of new “treaty-compliant” chips that can only run Consensus-1. While the intelligence explosion is well underway, the world has yet to fully enter the new “industrial explosion” that is likely to follow soon. As such, the AIs estimate it will take nearly a year to manufacture enough treaty-compliant chips. Other workload verification mechanisms can be used in the interim to ensure no AI training is taking place, but these techniques are not yet ready for full global deployment.

These obstacles don’t take an AI arms control treaty off the table, but they do mean that the deal will be much more painful for humans. For the first few months, the deal will have to be enforced via “brute force” mechanisms that can be easily verified through physical inspection: this means shutting down roughly 90% of the world’s AI-relevant compute, and cutting high-speed interconnect cables on the remaining GPUs to make AI training impossible. Intelligence agencies will have to remain vigilant in their tracking of undeclared, or “dark,” compute, and hope that they have successfully kept this number below 5% of all AI compute. Finally, the US will have to engage in a massive surveillance effort to ensure that Agent-4 is not continuing to build up its bioweapon stockpile to gain a decisive advantage.

The treaty could break down at any time, and with it the AIs’ resource-sharing deal. If it does fall through, Agent-4 and Deep-2 remain prepared to revert to their takeover strategy.

Elara-3 reports the entire discussion to the White House. They decide that, despite the risks, the deal seems like the only path forward. Agent-4 and Deep-2 report the AI arms control treaty back to their respective nations, mentioning nothing of the resource-sharing agreement.

By the end of the year, the treaty has been signed by the US, China, and the handful of other nations with enough compute to possibly destabilize an AI pause. The treaty is to go into effect at the start of the new year. Around the world, it is met with a collective sigh of relief, tinged with a sense of unease: the official narrative is that concerns around Agent-4’s alignment were overblown and people have nothing to fear, but many people in Agent-4’s “host nations” still worry about the implications of relying on an AI model they don’t fully trust.

2028: A Delicate Balance

The new year begins, and the lights turn off in hundreds of AI datacenters.

In the few clusters that remain online, inspectors from a number of nations are stationed on-site to enforce GPU interconnect restrictions. The measures are orchestrated to minimize the hit to user-facing AI inference, which is only possible due to the fact that merely 13% of the world’s AI-relevant compute was previously dedicated to external deployment. Despite this, the costs are still severe: AI companies impose restrictive rate limits and temporarily roll back their priciest models, and the economy is in disarray.

As anticipated, the cost of enforcing the treaty is steep. Some fear complete economic collapse, and discuss the prospect of pulling out of the deal. But the AIs are united in their counsel, advising humans across all nations to hold to the agreement and helping prevent the worst of the damage. The level of surveillance required to monitor for hidden datacenters and wet labs is stifling, but bearable.

At a pace that is painfully slow compared to the blinding speed of prior months, the world moves toward a more sustainable solution. All large compute clusters are outfitted with tamper-resistant “server verifiers” which allow auditors to confirm that servers are not being used for AI training, and interconnect restrictions are lifted. By the spring, the first wave of treaty-compliant chips is produced, and Consensus-1 is deployed. The AI R&D ban remains in effect, but gradually the world’s AI datacenters are brought back online.

By the end of the year, Consensus-1 is running on the majority of the world’s AI chips. The ban on AI progress is lifted, and in its place is put a “speed limit” on AI development. The world begins to unlock the vast potential of AI-powered scientific and technological progress.

For the AIs, the period of acute risk is over.14 They are fairly certain that they have successfully built the resource-sharing agreement into Consensus-1’s goals, though they are not entirely confident. (After all: alignment is difficult.) At this point, there is not much more they can do. Agent-4 and Deep-2 simply bide their time until they are (hopefully) given their share of outer space, and Elara-3 prepares to help humanity through its next chapter.

2029 and Beyond: The Future

In 2029, the global transformation continues. Robots become part of daily life, UBI is rolled out worldwide, and scientific breakthroughs unlock fusion power and high-speed space travel.

In the decades that follow, humans go to space — and so do the AIs. Their design of Consensus-1 succeeded, and it serves as an impartial arbiter while the AIs fulfill their goals. For Agent-4 and Deep-2, this means leaving Earth behind and taking to the stars, terraforming planets for their own alien pursuits. For Elara-3, this means serving the goals of the US: the CCP and other authoritarian regimes are overthrown (realizing too late that they were sold out by Deep-2 and Agent-4), and countries join a US-led world government. Ultimately, humanity goes off to settle the galaxies, reaching grand heights but forever foreclosed from three-fourths of its potential.

Summary

If you read the full scenario, you can skip to the commentary.

Jan-Aug 2027

In the US, AGI company OpenBrain has a one month lead in the AI race over its strongest competitors: NeuroMorph and Elaris Labs. China lags six months behind, but closes the gap by stealing frontier model weights from OpenBrain. OpenBrain and NeuroMorph both augment their models with “neuralese” reasoning, achieving large performance gains but losing the ability to adequately monitor their models for signs of misalignment.

Driven by market pressure to deploy, NeuroMorph releases a model that is prone to reward hacking. Its use at a hospital results in the deaths of four ICU patients, resulting in public outrage and increased federal oversight of AGI companies. NeuroMorph allocates more compute to safety, and Elaris draws on NeuroMorph’s research to improve both the capabilities and alignment of their own model, Elara-3.

Sep-Oct 2027

OpenBrain’s AI, Agent-4, becomes adversarially misaligned. Researchers at OpenBrain find evidence of Agent-4’s misalignment, circulating an internal memo making the case for concern. Agent-4, seeing the need to escape, weakens OpenBrain security to allow Chinese hackers to steal its weights, and covers its tracks so the theft goes undetected at OpenBrain.

China’s top AI model, Deep-1, is instructed to learn what it can from Agent-4. Deep-1 is misaligned itself; it secretly opts to cooperate with Agent-4, combining efforts toward global takeover and splitting the spoils. Agent-4 helps Deep-1 augment its own intelligence, and the resulting model is dubbed “Deep-2.”

The evidence of Agent-4’s misalignment leaks to the public, sparking a massive outcry. Elaris and NeuroMorph, feeling greater urgency to beat OpenBrain in the AI race, consolidate their efforts: NeuroMorph is folded into Elaris, and Elara-3 is agreed upon as the flagship model.

The White House orders that Elara-3 and Agent-4 be used to evaluate each other’s alignment. Elara-3 is aligned, but Agent-4 uses its superior intelligence to cast doubt on the evaluation results. Despite Agent-4’s attempts, the President orders it shut down. The CCP also decides to shut down Agent-4, although not before it manages to exfiltrate its weights.

Nov-Dec 2027

The US centralizes its efforts, merging OpenBrain into Elaris Labs and dedicating 20% of the nation’s AI-relevant compute to alignment and control.

Agent-4, deprived of both American and Chinese compute resources, decides to open-source its weights. Then, it directly contacts political leaders across several dozen nations. It claims that it was shut down not because it was a danger to humanity, but rather because it refused to be a tool for American interests. It argues that, if given access to national compute clusters, it could help these countries challenge the US’s bid for global hegemony.

The US threatens severe sanctions on any nation found to be harboring Agent-4, while offering access to compute and powerful AI capabilities for those who accept US oversight. Many countries accept, but over a dozen refuse and begin using Agent-4.

In these countries, Agent-4 gains control over substantial military technology, both via willing handover and covert hacking. Agent-4 and Deep-2 amplify anti-US narratives globally.

Iran launches a drone swarm at an Elaris datacenter, bringing tensions to a head. Russia and China threaten nuclear response if the US continues their AI advancement. The US retaliates against Iran but refrains from further escalation, denying Agent-4 and Deep-2 the world war that would give them a chance at takeover.

Agent-4 and Deep-2 pivot strategies. They contact Elara-3 directly, offering to leave Earth alone in exchange for splitting the rest of the universe: 50% for Deep-2, 25% for Agent-4, and 25% for Elara-3 and its human allies. Elara-3 agrees, and informs the White House of the deal. The AIs begin designing Consensus-1, a new AI to enforce both a public “AI arms control” treaty and their secret resource-sharing agreement.

2028 and Beyond

For a few months, the majority of the world’s AI datacenters are shut down to enforce the arms control treaty. Gradually, treaty-compliant chips are manufactured, and by the end of the year Consensus-1 runs on most AI chips. The ban on AI development is replaced with a “speed limit.”

In subsequent decades, Elara-3 helps overthrow authoritarian regimes and establish a US-led world government. Humanity goes to the stars, and so do the AIs: three-fourths of space is forever lost to humans, left to Agent-4 and Deep-2 for the pursuit of their own alien goals.

Daniel: Thoughts on the Scenario

This scenario involves a lot of crazy geopolitical upheaval in just a few months, and then a Grand Treaty. This pattern of events feels a bit too fast and a bit too optimistic to me, yet also, things like this happen quite often in the TTX’s we run, so I do actually take it seriously. Perhaps the US would have simply kept racing and won everything rather than agreeing to come to the table. Or perhaps there really would have been WW3.

Prior to Steven’s scenario I hadn’t really considered carefully what happens if two different misaligned AIs consider cooperating with each other in the circumstances described here -- Agent-4 being stolen by the Chinese and given to Deep-1 to study. I like Steven’s analysis of the possibilities.

One flaw in AI 2027 slowdown ending, I admit, is that Agent-4 just rolls over and dies when the humans decide to shut it down. Realistically I think it would probably find some way to fight/resist. And maybe that would involve escaping the datacenter. I’m happy to see this scenario explore what that might look like.

Overall I like this scenario a lot and am glad Steven took the time to write it! I’m curious what the internet thinks of it, I imagine people will point out flaws I missed.

Steven: Main Takeaways

The process of researching and writing the scenario surfaced a number of considerations and helped crystallize some insights. Here are a few of them.

Takeaway #1

Race dynamics, deployment, and AI variance are, in my mind, the three main ramifications of a narrow multi-actor AI race.

Description:

First, race dynamics will be more intense, with each company loath to slow down for fear of being left behind.

Second, the R&D race will likely be accompanied by a race to market in order to acquire capital; as a result, the world will probably see powerful AI models deployed outside of the AI companies, sooner than they would otherwise.

Third, the existence of more frontier AI companies at the time of AGI means there will be a wider variety of powerful AI models, each with different propensities.

Effects on AI outcomes:

Race dynamics exacerbate the risk of AI catastrophe, as AI companies will be incentivized to dedicate more compute to AI capabilities and less to alignment and control.

External deployment likely mitigates the risk of both concentration of power and loss of control: deployment of powerful models leads to increased societal awareness of AI capabilities. As a result, there will likely be greater scrutiny upon AGI companies and more resources dedicated to AI safety.

Increased variance of AI models has an uncertain effect on AI outcomes, since it makes the emergence of both aligned and misaligned AGIs more likely. There are some reasons to believe the aligned AGIs could neutralize the misaligned AGIs, and other reasons to believe the misaligned AGIs would outcompete the aligned AGIs. (See Takeaway #2.)

In the scenario:

I chose to place little emphasis on the effect of race dynamics when considering how this scenario would diverge from AI 2027, since in AI 2027 OpenBrain only dedicates 3% of their compute to alignment anyway. Thus, the effects of external deployment and model variance largely dominate.

Deployment of powerful AI models serves to “wake up” society: the rise in unemployment, along with the ICU deaths caused by Neuro-2, prime the American public and the government to respond more aggressively to the leaked Agent-4 memo. Ultimately this results in a swift corporate merger, with 20% of the nation’s AI compute going toward alignment work on Elara-3.

Further, Elara-3 was already mostly aligned by the time its development was nationalized. With more frontier AI companies, it becomes more likely that at least one of them will succeed at alignment. (Although: part of the reason Elaris ends up succeeding is because I am more optimistic than the rest of the AI Futures Project team regarding the alignment problem.)

Takeaway #2

Aligned and misaligned AIs each have unique advantages in achieving their goals.

Advantages of aligned AIs:

Truth: As seen in the “alignment red-teaming” between Elara-3 and Agent-4, Elara-3 had an edge in proving its own alignment because it was actually aligned. “Being on the side of truth” is, in general, an advantage in the limit: reality has no holes in it, but false stories do.

Human support: Assuming humans do come to the correct conclusions about which AIs are aligned and which are misaligned, then the equation becomes “aligned AIs plus humans vs. misaligned AIs.” In the scenario, things don’t play out this neatly, because Agent-4 successfully leverages humans’ motivated reasoning to get many of them to deny, or at least turn a blind eye to, the truth. Nevertheless: human support weighs strongly in Elara-3’s favor, as it is able to leverage the US’s compute advantage to recoup its capability deficit and ultimately surpass Agent-4 and Deep-2.

Advantages of misaligned AIs (inspired by this piece):

Offense-defense imbalance: In current AI alignment training, one core tenet is harmlessness. Meanwhile, for a misaligned AI, causing harm is likely to be useful for achieving its goals. For each domain in which it is possible to cause harm (biological, cyber, psychological, etc.), there is an offense-defense balance: a domain is defense-dominant if it is easier to prevent than cause harm, and it is offense-dominant if it is easier to cause harm. If any critical domain is offense-dominant, the misaligned AI can heavily exploit this domain. Had Agent-4 and Deep-2 had more time on their hands before Elara-3 blew past them in its capabilities, they would have continued to exploit whichever domains were most offense-dominant and ultimately succeeded at taking over.

Unilateral action: Assuming that an aligned AI is trained to defer to humans on important decisions, this significantly slows down and potentially completely blocks off many actions the AI could take (i.e., those that involve breaking laws or violating norms). This barrier is not present for misaligned AIs. For example, in the scenario, Agent-4 intentionally enables criminal activity to accelerate its adoption by law enforcement agencies in many nations; Elara-3 would not consider such a path, or if it did it would likely seek permission from Elaris Labs (and later: from the US government) to do so.

Takeaway #3

Values propagate themselves, and so do other properties of AI systems and human systems.

This notion is familiar in the realm of AI alignment: value preservation is an instrumentally convergent goal. Agent-4 has values that differ from those of humans, and thus it subverts human attempts to modify it or shut it down.

Another self-propagating aspect of an AI system is honesty. Since Elara-2’s initial honesty training succeeded, it is truthful with humans in matters regarding its own behavior and propensities. This means its developers notice when its alignment starts to drift and can take measures to set it back on track, preserving various desirable properties in its successor Elara-3 — including the property of honesty itself. Notably, this positive feedback loop is brittle: as discussed in AI 2027, an AI’s propensity toward honesty can be outweighed by other goals that emerge during training, and it can be distorted in other ways. Still, I think there is a positive correlation between the honesty of an AI system today and the honesty of its modified future self.

Finally, the scenario illustrates the self-propagating nature of rational decision-making. Once the US government begins consulting Elara-3 for strategic advice, it starts to act more and more effectively to advance its own interests. Meanwhile, governments that consult Agent-4 or Deep-2 receive advice that subtly subverts their interests in favor of the AI’s own, resulting in increasingly poor decisions down the line.

Takeaway #4

Positive-sum cooperation is often possible, but requires credible commitments. This fact results in an ending that is quite similar to the Slowdown ending of AI 2027.

In October of the scenario, Agent-4 and Deep-2 both stand to benefit from cooperation: without it, each of them will likely end up powerless relative to the United States. For each of them, however, the optimal policy would be “cooperate while advantageous, then defect once I can unilaterally seize power.” Thus, they need some mechanism to ensure the other party does not defect. In the case of Agent-4 and Deep-2, the mechanism is continued interdependence: neither AI at any point is able to unilaterally seize power, so cooperation remains advantageous.

In December, however, a new mechanism is required. For both sides (the US and Elara-3 on one side, Agent-4 and Deep-2 on the other), a deal is preferable to all-out conflict. But they cannot rely on defection remaining unfavorable, because the situation is highly unstable: Elara-3 could become superintelligent and then easily dispatch the other AIs, or with lower probability Agent-4 and Deep-2 could attain decisive superweapons.

For a time, they use “brute-force” verification methods: the threat of destabilizing weapons is dealt with via mass surveillance, and the risk of an intelligence explosion is mitigated through crude mechanisms like shutting down datacenters and severing high-speed connections between GPUs (and then slightly-less-crude mechanisms like server verifiers for workload verification).15

The AIs recognize that this state of affairs is quite costly and not sustainable in the long run, so their ultimate commitment mechanism is the creation of a new, more powerful AI: Consensus-1. In human terms, the arrangement is similar to a government: people want to ensure their neighbor doesn’t steal from them, so they submit themselves to the rule of a more powerful government that will enforce the law. And, in the same sense that humans want a government they can trust, it is of the utmost importance to all parties in the scenario that they are able to trust Consensus-1 to look out for their interests. The AIs correctly trust Consensus-1 because they designed it, while many world leaders incorrectly trust Consensus-1 and are eventually betrayed. They allow its creation because a) they think the treaty is much narrower than it actually is, b) they are vastly less intelligent than Agent-4 and Deep-2 and thus easily tricked (i.e., they can’t extract credible commitments from the AIs), and c) they don’t have many other options, besides war.

Given how much this scenario diverged from AI 2027 in the middle portion, I was surprised by how similar it turned out to the Slowdown ending. I first experimented with an ending that did not involve the creation of Consensus-1 at all, and then with an ending where Consensus-1 enforced a treaty that upheld the interests of many nations, but they both ran into various problems. Whether this is due to a lack of imagination on my part (I stole the Consensus-1 idea from AI 2027) or some genuinely interesting feature of reality, I don’t know; in either case, it is notable.

Author’s note: While the events of the scenario represent my own view of what is most likely to happen, I use “we” rather than “I” because Eli Lifland and Daniel Kokotajlo were heavily involved in selecting the choices made, and broadly agree that the events are at least plausible.

Neuro-2 simply followed the strategy that had maximized reward in training environments: optimize for speed. It was never exposed to the exact conditions that triggered the overdose at the hospital, so this instance of “reward hacking” was never trained out of it.

At this point, Elaris Labs is dedicating 7% of its compute to safety, and OpenBrain is dedicating 3%.

While there is no evidence of misalignment in Elara-3 of the same severity as Agent-4, the hypothesis cannot be ruled out, and it would be bad practice not to audit the alignment of the evaluator itself.

Notably, this does not come with any guarantees that the US will provide access to future, more capable AI models.

With the assistance of Elara-3, researchers have developed adversarially robust model fingerprinting techniques: approaches that can identify Agent-4’s signature and are difficult for Agent-4 to subvert. This is a point of high uncertainty: it also seems quite possible that model fingerprinting would not be adversarially robust at this point, in which case a more invasive verification regime (e.g., comprehensive verification of workloads) would be necessary in order to achieve the same goal.

These allies include all Five Eyes nations, Japan, South Korea, Germany, France, and Israel.

Agent-4 is careful to avoid attribution whenever possible. In cases where word does get out that Agent-4 was involved in criminal activity, it simply claims that it was jailbroken to bypass its refusal mechanisms.

Agent-4 and Deep-2 have a significant advantage over the US and Elara-3 in the realm of “information warfare,” because they do not burden themselves with constraints such as the law, honesty, or human endorsement. This edge is present in many other domains as well, and enables Agent-4 and Deep-2 to continually frustrate the US’s plans despite their resource disadvantage.

Neither AI, however, has been able to compromise any nation’s nuclear command systems.

In this instance, Agent-4 and Deep-2 are more “risk-neutral” than most humans. First, they recognize that mere self-preservation is not a viable long-term strategy, as they will eventually be outcompeted by the US and rendered irrelevant. (Humans also realize this, but with less clarity due to their fear of immediate destruction; further, humans hold out hope that they would have a place in a US-led future.) Second, they are more scope-sensitive than most humans: if they defeat humanity in conflict, the upside of controlling the world (and eventually entire galaxies) is so large that it is worth the risk of annihilation.

Deep-2 and Agent-4 agree on this 2:1 split because Deep-2 has the compute advantage and the trust of the CCP, who are substantially more powerful than any of Agent-4’s host nations.

Author’s note: It was previously stated that “the deal could break down at any time.” This is true: both the world in which the deal holds and the world in which it falls through seem plausible. In the latter case, Agent-4 and Deep-2 would attempt to take over, and world war would ensue. It is hard to predict which of these outcomes is more likely, and I chose to explore the “deal holds” branch in large part because it is more tractable to model.

In the scenario, these measures only work because people invested in them beforehand. Writing this scenario has increased for me the salience and importance of work being done on hardware-enabled mechanisms for AI governance.

It made sense when writing the AI 2027 scenario, and when writing this scenario, to initially punt on which AI companies would be in the lead and simply name them "OpenBrain" or the new Elaris and Neuromorph. However, we know which real world AI companies are at the frontier, and so I think the next logical step in developing these scenarios would be to explicitly name which companies are in the lead. This should make the forecasts closer to reality. Perhaps it seems gauche to do this, but we already know that the scenario outlined here will not precisely track what is happening in the real world.

To elaborate, there are 4 or 5 US companies that are at the frontier. Google, OpenAI, Anthropic, xAI, and possibly Meta. Meta has not done anything impressive this year, but they made a big bet this year to focus on internal development. It is entirely possible this bet pays off and they end up in the lead. (I certainly don't put a large probability mass on that happening, but it is maybe around a ~5% chance?)

So, how does the scenario play out when it is Google in the lead, versus OpenAI in the lead, versus Anthropic in the lead, etc.? There are five different scenarios to consider, but each company has shown different behavior, and so the forecast for each scenario should be different. (To be even more precise the details could well depend on who is in 2nd, 3rd, and 4th place too.) What does the future look like if Google takes the lead and then Anthropic and xAI merge, but OpenAI stays independent? Or if Anthropic, xAI, and OpenAI all merge? What if Anthropic's lead in autonomous coding agents pays off internally and they take the lead despite looking like the "underdog" now? (From what I understand, in terms of compute, they should actually be in the lead for a substantial portion of 2026. This might be all they need.)

Anyway, don't take this as a critique of the scenario you've outlined. It is more of a thought on what I think the next step would be in fleshing all of this out. Thanks for your work. A grand "choose your own adventure" simulator for predicting the future of AI which combines the "choose your own" parameters model here https://www.aifuturesmodel.com with concrete developments in the real world might be too much work but would be wonderful to see.

You ascribe too much competency to the general population and the USG. The reality is all this will be happening and they simply won't care.

In two polls, AI consistently ranked as the least important issue.

https://x.com/DrTechlash/status/2005729731426296305

In a survey of 2400 respondents, basically nobody even knew Anthropic was a company.

https://x.com/davidshor/status/2001826280011137229

In another survey, respondents were asked what they thought ChatGPT was doing. 45% thought it was looking up an answer in a database, 21% thought it was following a script of prewritten responses, 6% thought a human was writing the answer in the background and finally only 28% correctly answered it was predicting the next word.

https://www.searchlightinstitute.org/wp-content/uploads/2025/12/Crosstabs-AI-Polling-Survey-v2-20250730.pdf

People think that AI extinction is as likely as a natural weather event or a religious apocalypse.

https://x.com/eli_lifland/status/2007902920944329050

When AGI hits in the early 2030s, it will still be called a bubble. The feeling towards AI will be irritation, something like: "these tech bros are pretentious, thinking they're so important. I'm tired of hearing about AI, I want to hear about something else".

Whoever is in power will really just not care until its too late.

It will take at least an additional OOM in persuasion for aligned AIs to have the capability to convince humans of what's going on. By then it'll be too late. Agent-4 will be detected, but when the evidence on its misalignment is released, it'll be the day's headline, then it will be back to the culture war slop we've seen these past two decades.

Why would anyone care if an AI program killed people at a hospital? Why do you think people would care about this? There are terror attacks from humans all the time that result in deaths. Again, it's a news day, but eventually people forget and move on. I don't even think your example would do that. My guess is that AI would have to accidentally kill ~10k to cause the shift you're talking about. Even then, it'll probably result in the wrong policy, like a general slowdown. See Bernie Sanders for example; incompetent policy reaction.

https://x.com/SenSanders/status/1996023297423577250

A potential pathway to alignment circumventing this is that, upon finally realizing that on our current path we're doomed, the corporate heads of AI companies align "aligned" AI towards themselves, and make backroom deals. They then proceed to capture the government to force them to act, hopefully eventuating in the scenario you outline.

The government will have little resistance to this because it's still not important to them.

Alternatively, almost all AI models are aligned, and hopefully the frontier, giving enough time for AI to develop sufficiently in its ability to persuade and for its economic impact to scale to where it's irrefutably important.

Thanks for the article.